This content is powered by Balige Publishing (collaboration with Rismon Hasiholan Sianipar).


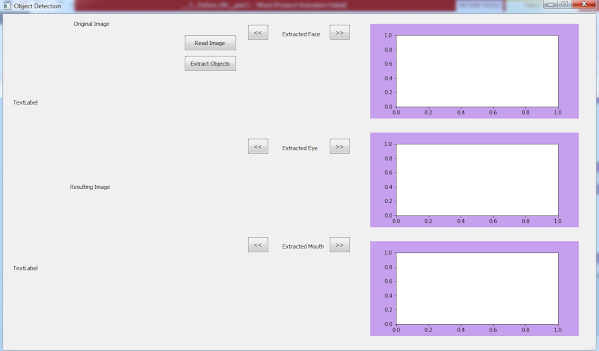

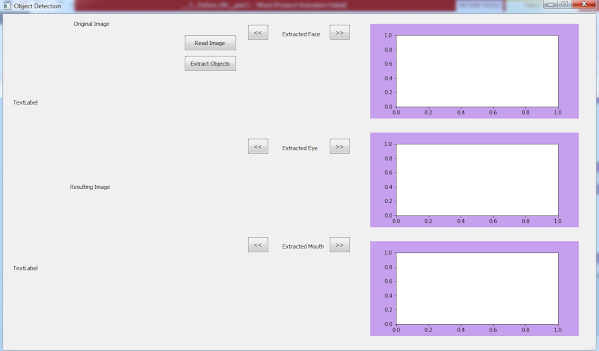


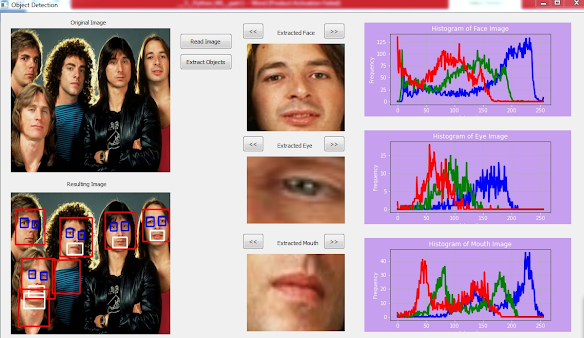

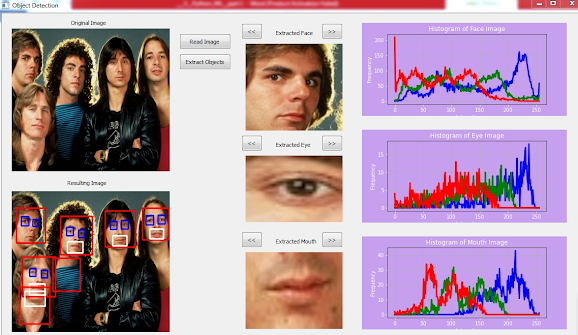


Part 1

Tutorial Steps To Extract Detected Objects

On the object_detection.ui form, place one Push Button widget. Set its text property to Extract Objects and its objectName property to pbExtracObjects.

Add three new Label widgets to the form and set their objectName properties to labelFace, labelEye, and labelMouth. These labels will display the extracted face, eye, and mouth images.

Then add six new Push Button widgets to the form and set their objectName properties to pbPrevFace, pbNextFace, pbPrevEye, pbNextEye, pbPrevMouth, and pbNextMouth. These buttons step backward and forward through the extracted faces, eyes, and mouths.

Put three Widget items from the Containers panel on the form and set their objectName properties to histFace, histEye, and histMouth. They will host the histogram plots.

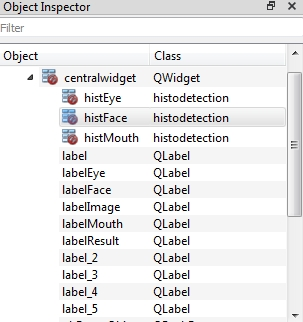

Next, select the three Widgets, right-click them, and choose Promote to ... from the context menu. Enter histodetection as the Promoted class name, click the Add button, and then click the Promote button. In the Object Inspector window, histFace, histEye, and histMouth (class histodetection) now appear with the other widgets inside the centralwidget object (class QWidget), as shown in the figure below.
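As far as we understand PyQt5's uic module, loadUi() resolves a promoted widget in four steps. It takes the Header file stored for the widget in the .ui file (Qt Designer normally proposes histodetection.h), drops the .h suffix, and replaces any / with a dot. It then imports the resulting module and looks up the class by name. This is why histodetection has to be defined in an importable module named histodetection. The sketch below mimics that lookup with importlib. header_to_module() and resolve_promoted_class() are hypothetical helpers, not PyQt5 API.

```python
import importlib


def header_to_module(header):
    """Turn a Designer 'Header file' entry into a dotted module name.

    Hypothetical helper that mimics the conversion PyQt5's uic is
    understood to perform: drop '.h' and turn '/' separators into dots.
    """
    if header.endswith(".h"):
        header = header[:-2]
    # Skip empty parts and '.' (current directory) parts
    parts = [p for p in header.split("/") if p not in ("", ".")]
    return ".".join(parts)


def resolve_promoted_class(class_name, header):
    """Import the module named by the header and return the class object."""
    module = importlib.import_module(header_to_module(header))
    return getattr(module, class_name)
```

For this tutorial, header_to_module("histodetection.h") yields "histodetection". resolve_promoted_class("histodetection", "histodetection.h") would therefore import histodetection.py and return the class defined in it.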

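Define the histodetection class in a Python file and save it as histodetection.py. The class embeds a Matplotlib FigureCanvas in a QWidget and creates one subplot, canvas.axes1, on which the histograms are drawn:

```python
# histodetection.py
from PyQt5.QtWidgets import QWidget, QVBoxLayout
from matplotlib.backends.backend_qt5agg import FigureCanvas
from matplotlib.figure import Figure


class histodetection(QWidget):
    """Promoted widget that hosts a Matplotlib canvas for histograms."""

    def __init__(self, parent=None):
        QWidget.__init__(self, parent)
        # Embed a Matplotlib figure in the widget through a vertical layout
        self.canvas = FigureCanvas(Figure())
        vertical_layout = QVBoxLayout()
        vertical_layout.addWidget(self.canvas)
        # Single subplot used by display_histogram() as canvas.axes1
        self.canvas.axes1 = self.canvas.figure.add_subplot(111)
        self.canvas.figure.set_facecolor("xkcd:lavender")
        self.setLayout(vertical_layout)
```

Run object_detection.py to see the form, as shown in the figure below.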

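Define a new method, initialization(), that resets the file counters, the lists of saved file names, the current list positions, and the three result labels:

```python
def initialization(self):
    # Face: file counter, list of saved file names, index of the displayed item
    self.ROI_face = 0
    self.listFaces = []
    self.listFaceItem = 0
    # Eye: same three variables
    self.ROI_eye = 0
    self.listEyes = []
    self.listEyeItem = 0
    # Mouth: same three variables
    self.ROI_mouth = 0
    self.listMouths = []
    self.listMouthItem = 0
    # Remove any previously displayed crops
    self.labelFace.clear()
    self.labelEye.clear()
    self.labelMouth.clear()
```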
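initialization() resets three parallel sets of variables, one set per category. The sketch below is a hypothetical alternative, not the tutorial's code. It groups one category's counter, file list, and current index into a dataclass so that each reset becomes a single call. It is plain Python and does not touch the Qt labels.

```python
from dataclasses import dataclass, field


@dataclass
class ExtractionState:
    """Hypothetical grouping of one category's bookkeeping variables.

    counter plays the role of ROI_face / ROI_eye / ROI_mouth,
    files the role of listFaces / listEyes / listMouths, and
    current the role of listFaceItem / listEyeItem / listMouthItem.
    """
    counter: int = 0
    files: list = field(default_factory=list)  # a fresh list per instance
    current: int = 0

    def reset(self):
        """Return to the values that initialization() assigns."""
        self.counter = 0
        self.files = []
        self.current = 0


# One independent state object per detected category
states = {name: ExtractionState() for name in ("face", "eye", "mouth")}
```

With this grouping, initialization() would reduce to calling reset() on each state and clearing the three labels. default_factory=list matters here: it gives every state its own list instead of one shared list.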

Define three new methods: face_extraction(), eye_extraction(), and mouth_extraction(). Each one crops every detected object, saves the crop as a PNG file, and appends the file name to the corresponding list:

```python
def face_extraction(self, img):
    for (x, y, w, h) in self.faces:
        roi_color = img[y:y+h, x:x+w]
        fname = 'ROI_Face{}.png'.format(self.ROI_face)
        # Save into a .png file. The crop is converted to RGB first, so the
        # file has red and blue swapped; the navigation methods swap them
        # back after reading the file with cv2.imread()
        cv2.imwrite(fname, cv2.cvtColor(roi_color, cv2.COLOR_BGR2RGB))
        # Save the file name into the list
        self.listFaces.append(fname)
        self.ROI_face += 1

def eye_extraction(self, img):
    for (x, y, w, h) in self.eyes:
        roi_color = img[y:y+h, x:x+w]
        fname = 'ROI_Eye{}.png'.format(self.ROI_eye)
        cv2.imwrite(fname, cv2.cvtColor(roi_color, cv2.COLOR_BGR2RGB))
        self.listEyes.append(fname)
        self.ROI_eye += 1

def mouth_extraction(self, img):
    for (x, y, w, h) in self.mouths:
        roi_color = img[y:y+h, x:x+w]
        fname = 'ROI_Mouth{}.png'.format(self.ROI_mouth)
        cv2.imwrite(fname, cv2.cvtColor(roi_color, cv2.COLOR_BGR2RGB))
        self.listMouths.append(fname)
        self.ROI_mouth += 1
```
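The key detail in these methods is the slice order. NumPy images are indexed [row, column], so a detection box (x, y, w, h) becomes img[y:y+h, x:x+w]. The counter also persists between calls, so names stay unique even though eye_extraction() and mouth_extraction() run once per face. The pure-Python sketch below reproduces the crop and the ROI_Face0.png, ROI_Face1.png, ... naming on a list-of-lists image, so it runs without OpenCV. crop() and extract() are hypothetical names, and nothing is written to disk.

```python
def crop(img, box):
    """Return the sub-image covered by box = (x, y, w, h).

    img is a list of rows, so rows are selected by y and columns by x,
    matching the NumPy slice img[y:y+h, x:x+w].
    """
    x, y, w, h = box
    return [row[x:x + w] for row in img[y:y + h]]


def extract(img, boxes, prefix, start=0):
    """Crop every box and pair each crop with a numbered file name.

    start plays the role of the persistent ROI_* counter.
    Returns (names, crops, next_counter).
    """
    names, crops = [], []
    counter = start
    for box in boxes:
        crops.append(crop(img, box))
        names.append("{}{}.png".format(prefix, counter))
        counter += 1
    return names, crops, counter
```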

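Modify the object_detection() method so that it invokes the three extraction methods. Call face_extraction() right after the faces are detected. Inside the loop over the faces, call eye_extraction() and mouth_extraction() on each face region right after the eyes and the mouths in that face are detected. The complete modified method appears in the full script of object_detection.py at the end of this part.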
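The eye and mouth detectors run on a face region, so the boxes in self.eyes and self.mouths are relative to the face's top-left corner, not to the whole image. Drawing on the region still lands in the right place because a NumPy slice such as img[y:y+h, x:x+w] is a view into img. If you need the boxes in full-image coordinates, though (for example, to log them), you must add the face offset. The helper below is a hypothetical sketch of that conversion.

```python
def to_image_coords(face_box, inner_box):
    """Map a box found inside a face region back to full-image coordinates.

    Both boxes are (x, y, w, h); inner_box is relative to the top-left
    corner of face_box, as returned by a detector run on the face region.
    Width and height are unchanged by the translation.
    """
    fx, fy, _, _ = face_box
    ex, ey, ew, eh = inner_box
    return (fx + ex, fy + ey, ew, eh)
```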

Define the display_histogram() method, which plots the histogram of an input image on a histodetection widget. A grayscale image gets a single yellow curve; a color image gets one curve per channel:

```python
def display_histogram(self, img, qwidget1, title):
    axes = qwidget1.canvas.axes1
    axes.clear()
    if img.ndim == 2:
        # Grayscale image: one histogram curve
        histr = cv2.calcHist([img], [0], None, [256], [0, 256])
        axes.plot(histr, color='yellow', linewidth=3.0)
    else:
        # Color image: one curve per channel, in OpenCV's B, G, R order
        color = ('b', 'g', 'r')
        for i, col in enumerate(color):
            histr = cv2.calcHist([img], [i], None, [256], [0, 256])
            axes.plot(histr, color=col, linewidth=3.0)
    # Labels, ticks, title, and background shared by both cases
    axes.set_ylabel('Frequency', color='white')
    axes.set_xlabel('Intensity', color='white')
    axes.tick_params(axis='x', colors='white')
    axes.tick_params(axis='y', colors='white')
    axes.set_title(title, color='white')
    axes.set_facecolor('xkcd:lavender')
    axes.grid(True)
    qwidget1.canvas.draw()
```
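In display_histogram(), cv2.calcHist([img],[i],None,[256],[0,256]) counts how many pixels in channel i have each intensity from 0 to 255. OpenCV stores color images in B, G, R order, so channel 0 is drawn in blue, channel 1 in green, and channel 2 in red. The pure-Python sketch below computes the same 256-bin counts for 8-bit data so the idea can be checked without OpenCV. channel_histogram() is a hypothetical name. Unlike calcHist, which returns a 256x1 float32 array, it returns a plain list of ints.

```python
def channel_histogram(pixels, channel=None, bins=256):
    """Count how many pixels take each intensity value 0..bins-1.

    pixels is a flat list of either ints (grayscale) or (b, g, r) tuples;
    channel selects the tuple element to count, like the channels
    argument of calcHist. Leave channel as None for grayscale data.
    """
    hist = [0] * bins
    for p in pixels:
        value = p if channel is None else p[channel]
        hist[value] += 1
    return hist
```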

Define a navigation method for every navigation button. Each Next method moves to the following file in its list and wraps around to the first file after the last one. Each Prev method moves backward and wraps around to the last file. The selected crop is shown in its label, and its histogram in the matching histodetection widget. If nothing was extracted, the method returns without doing anything:

```python
def display_face_prev(self):
    list_len = len(self.listFaces)
    if list_len == 0:  # no face was extracted: nothing to show
        return
    # Move back one item, wrapping from the first to the last
    if self.listFaceItem != 0:
        self.listFaceItem -= 1
    else:
        self.listFaceItem = list_len - 1
    extracted_img = cv2.imread(self.listFaces[self.listFaceItem],
                               cv2.IMREAD_COLOR)
    # Undo the red/blue swap applied when the crop was saved (back to BGR)
    extracted_img = cv2.cvtColor(extracted_img, cv2.COLOR_BGR2RGB)
    # display_resulting_image() converts its input in place, so pass a copy
    # and keep extracted_img in BGR order for the histogram
    self.display_resulting_image(extracted_img.copy(), self.labelFace)
    self.display_histogram(extracted_img, self.histFace,
                           'Histogram of Face Image')

def display_face_next(self):
    list_len = len(self.listFaces)
    if list_len == 0:
        return
    # Move forward one item, wrapping from the last to the first
    if list_len != self.listFaceItem + 1:
        self.listFaceItem += 1
    else:
        self.listFaceItem = 0
    extracted_img = cv2.imread(self.listFaces[self.listFaceItem],
                               cv2.IMREAD_COLOR)
    extracted_img = cv2.cvtColor(extracted_img, cv2.COLOR_BGR2RGB)
    self.display_resulting_image(extracted_img.copy(), self.labelFace)
    self.display_histogram(extracted_img, self.histFace,
                           'Histogram of Face Image')

def display_eye_next(self):
    list_len = len(self.listEyes)
    if list_len == 0:
        return
    if list_len != self.listEyeItem + 1:
        self.listEyeItem += 1
    else:
        self.listEyeItem = 0
    extracted_img = cv2.imread(self.listEyes[self.listEyeItem],
                               cv2.IMREAD_COLOR)
    extracted_img = cv2.cvtColor(extracted_img, cv2.COLOR_BGR2RGB)
    self.display_resulting_image(extracted_img.copy(), self.labelEye)
    self.display_histogram(extracted_img, self.histEye,
                           'Histogram of Eye Image')

def display_eye_prev(self):
    list_len = len(self.listEyes)
    if list_len == 0:
        return
    if self.listEyeItem != 0:
        self.listEyeItem -= 1
    else:
        self.listEyeItem = list_len - 1
    extracted_img = cv2.imread(self.listEyes[self.listEyeItem],
                               cv2.IMREAD_COLOR)
    extracted_img = cv2.cvtColor(extracted_img, cv2.COLOR_BGR2RGB)
    self.display_resulting_image(extracted_img.copy(), self.labelEye)
    self.display_histogram(extracted_img, self.histEye,
                           'Histogram of Eye Image')

def display_mouth_next(self):
    list_len = len(self.listMouths)
    if list_len == 0:
        return
    if list_len != self.listMouthItem + 1:
        self.listMouthItem += 1
    else:
        self.listMouthItem = 0
    extracted_img = cv2.imread(self.listMouths[self.listMouthItem],
                               cv2.IMREAD_COLOR)
    extracted_img = cv2.cvtColor(extracted_img, cv2.COLOR_BGR2RGB)
    self.display_resulting_image(extracted_img.copy(), self.labelMouth)
    self.display_histogram(extracted_img, self.histMouth,
                           'Histogram of Mouth Image')

def display_mouth_prev(self):
    list_len = len(self.listMouths)
    if list_len == 0:
        return
    if self.listMouthItem != 0:
        self.listMouthItem -= 1
    else:
        self.listMouthItem = list_len - 1
    extracted_img = cv2.imread(self.listMouths[self.listMouthItem],
                               cv2.IMREAD_COLOR)
    extracted_img = cv2.cvtColor(extracted_img, cv2.COLOR_BGR2RGB)
    self.display_resulting_image(extracted_img.copy(), self.labelMouth)
    self.display_histogram(extracted_img, self.histMouth,
                           'Histogram of Mouth Image')
```
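The if/else blocks in these methods implement wrap-around navigation. For any current index between 0 and len-1, this is the same as modular arithmetic, which the hypothetical step() helper below uses. step() also returns None for an empty list, the case where indexing into the list would otherwise raise an IndexError.

```python
def step(index, length, delta):
    """Return the new index after moving delta places through a list of
    the given length, wrapping around at either end.

    Returns None for an empty list so the caller can skip displaying.
    """
    if length == 0:
        return None
    # Python's % always yields a value in 0..length-1 for positive length
    return (index + delta) % length
```

Next corresponds to step(i, n, +1) and Prev to step(i, n, -1).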

Define display_objects() to invoke the three forward navigational methods:

```python
def display_objects(self):
    # Advance each viewer (face, eye, mouth) to its next crop and histogram
    self.display_face_next()
    self.display_eye_next()
    self.display_mouth_next()
```

Connect the clicked() signal of the pbExtracObjects widget to the display_objects() method by adding the following line inside the __init__() method:

```python
self.pbExtracObjects.clicked.connect(self.display_objects)
```

Connect the clicked() signal of every navigation push button to the corresponding navigation method, again inside the __init__() method:

```python
self.pbNextFace.clicked.connect(self.display_face_next)
self.pbPrevFace.clicked.connect(self.display_face_prev)
self.pbNextEye.clicked.connect(self.display_eye_next)
self.pbPrevEye.clicked.connect(self.display_eye_prev)
self.pbNextMouth.clicked.connect(self.display_mouth_next)
self.pbPrevMouth.clicked.connect(self.display_mouth_prev)
```
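The six connect() calls follow one pattern: a button's clicked signal is wired to the method with the matching name. If you prefer, the pairs can be kept in a table and connected in a loop with getattr(), as in the sketch below. To keep it runnable without Qt, FakeSignal, FakeButton, and FakeForm are hypothetical stand-ins that mimic only connecting and emitting. In the real form, you would call connect_all(self, CONNECTIONS) inside __init__().

```python
class FakeSignal:
    """Hypothetical stand-in for a Qt signal: stores slots, calls them on emit()."""

    def __init__(self):
        self._slots = []

    def connect(self, slot):
        self._slots.append(slot)

    def emit(self):
        for slot in self._slots:
            slot()


class FakeButton:
    """Hypothetical stand-in for a QPushButton with only a clicked signal."""

    def __init__(self):
        self.clicked = FakeSignal()


# (objectName of the button, name of the slot method), as in the tutorial
CONNECTIONS = [
    ("pbNextFace", "display_face_next"),
    ("pbPrevFace", "display_face_prev"),
    ("pbNextEye", "display_eye_next"),
    ("pbPrevEye", "display_eye_prev"),
    ("pbNextMouth", "display_mouth_next"),
    ("pbPrevMouth", "display_mouth_prev"),
]


def connect_all(form, pairs):
    """Look up each button and slot by name and connect clicked to the slot."""
    for button_name, slot_name in pairs:
        getattr(form, button_name).clicked.connect(getattr(form, slot_name))


class FakeForm:
    """Hypothetical form with one FakeButton per button name and one
    callable per slot name that records the slot's name when called."""

    def __init__(self):
        self.calls = []
        for button_name, slot_name in CONNECTIONS:
            setattr(self, button_name, FakeButton())
            # The default argument binds the current slot_name to this lambda
            setattr(self, slot_name,
                    lambda name=slot_name: self.calls.append(name))
```

A typo in either column fails loudly with an AttributeError when connect_all() runs, which is the same failure you would get from a mistyped explicit connect() call.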

Run object_detection.py and choose a test image. Then click the Extract Objects button to see every extracted face, eye, and mouth together with its histogram, as shown in the figure below.

The following is the full script of object_detection.py so far:

```python
# object_detection.py
import sys
import cv2
import numpy as np
from PyQt5.QtWidgets import *
from PyQt5 import QtGui, QtCore
from PyQt5.uic import loadUi
from matplotlib.backends.backend_qt5agg import (NavigationToolbar2QT as NavigationToolbar)
from PyQt5.QtWidgets import QDialog, QFileDialog
from PyQt5.QtGui import QIcon, QPixmap, QImage


class FormObjectDetection(QMainWindow):
    def __init__(self):
        QMainWindow.__init__(self)
        loadUi("object_detection1.ui", self)
        self.setWindowTitle("Object Detection")

        self.face_cascade = cv2.CascadeClassifier(
            cv2.data.haarcascades + 'haarcascade_frontalface_default.xml')
        if self.face_cascade.empty():
            raise IOError('Unable to load the face cascade classifier xml file')

        self.eye_cascade = cv2.CascadeClassifier(
            cv2.data.haarcascades + 'haarcascade_eye.xml')
        if self.eye_cascade.empty():
            raise IOError('Unable to load the eye cascade classifier xml file')

        # Downloaded from https://github.com/dasaanand/OpenCV/blob/master/EmotionDet/build/Debug/EmotionDet.app/Contents/Resources/haarcascade_mouth.xml
        self.mouth_cascade = cv2.CascadeClassifier('haarcascade_mouth.xml')
        if self.mouth_cascade.empty():
            raise IOError('Unable to load the mouth cascade classifier xml file')

        self.pbReadImage.clicked.connect(self.read_image)
        self.pbExtracObjects.clicked.connect(self.display_objects)
        self.pbNextFace.clicked.connect(self.display_face_next)
        self.pbPrevFace.clicked.connect(self.display_face_prev)
        self.pbNextEye.clicked.connect(self.display_eye_next)
        self.pbPrevEye.clicked.connect(self.display_eye_prev)
        self.pbNextMouth.clicked.connect(self.display_mouth_next)
        self.pbPrevMouth.clicked.connect(self.display_mouth_prev)

        self.initialization()

    def initialization(self):
        self.ROI_face = 0
        self.listFaces = []
        self.listFaceItem = 0
        self.ROI_eye = 0
        self.listEyes = []
        self.listEyeItem = 0
        self.ROI_mouth = 0
        self.listMouths = []
        self.listMouthItem = 0
        self.labelFace.clear()
        self.labelEye.clear()
        self.labelMouth.clear()

    def display_objects(self):
        self.display_face_next()
        self.display_eye_next()
        self.display_mouth_next()

    def read_image(self):
        self.initialization()
        self.fname = QFileDialog.getOpenFileName(
            self, 'Open file', 'd:\\', "Image Files (*.jpg *.gif *.bmp *.png)")
        if not self.fname[0]:  # the dialog was cancelled
            return
        self.pixmap = QPixmap(self.fname[0])
        self.labelImage.setPixmap(self.pixmap)
        self.labelImage.setScaledContents(True)
        self.img = cv2.imread(self.fname[0], cv2.IMREAD_COLOR)
        if self.img is None:  # OpenCV could not read the file
            return
        self.do_detection()

    def display_resulting_image(self, img, label):
        height, width, channel = img.shape
        bytesPerLine = 3 * width
        # Converts BGR to RGB in place (the caller's array is modified)
        cv2.cvtColor(img, cv2.COLOR_BGR2RGB, img)
        qImg = QImage(img, width, height, bytesPerLine, QImage.Format_RGB888)
        pixmap = QPixmap.fromImage(qImg)
        label.setPixmap(pixmap)
        label.setScaledContents(True)

    def face_extraction(self, img):
        for (x, y, w, h) in self.faces:
            roi_color = img[y:y+h, x:x+w]
            fname = 'ROI_Face{}.png'.format(self.ROI_face)
            # Saved into a .png file with red and blue swapped
            cv2.imwrite(fname, cv2.cvtColor(roi_color, cv2.COLOR_BGR2RGB))
            # Saved into the list
            self.listFaces.append(fname)
            self.ROI_face += 1

    def eye_extraction(self, img):
        for (x, y, w, h) in self.eyes:
            roi_color = img[y:y+h, x:x+w]
            fname = 'ROI_Eye{}.png'.format(self.ROI_eye)
            cv2.imwrite(fname, cv2.cvtColor(roi_color, cv2.COLOR_BGR2RGB))
            self.listEyes.append(fname)
            self.ROI_eye += 1

    def mouth_extraction(self, img):
        for (x, y, w, h) in self.mouths:
            roi_color = img[y:y+h, x:x+w]
            fname = 'ROI_Mouth{}.png'.format(self.ROI_mouth)
            cv2.imwrite(fname, cv2.cvtColor(roi_color, cv2.COLOR_BGR2RGB))
            self.listMouths.append(fname)
            self.ROI_mouth += 1

    def display_face_prev(self):
        list_len = len(self.listFaces)
        if list_len == 0:  # nothing extracted
            return
        if self.listFaceItem != 0:
            self.listFaceItem -= 1
        else:
            self.listFaceItem = list_len - 1
        extracted_img = cv2.imread(self.listFaces[self.listFaceItem],
                                   cv2.IMREAD_COLOR)
        # Undo the swap made when saving (back to BGR)
        extracted_img = cv2.cvtColor(extracted_img, cv2.COLOR_BGR2RGB)
        # Pass a copy: display_resulting_image() converts in place
        self.display_resulting_image(extracted_img.copy(), self.labelFace)
        self.display_histogram(extracted_img, self.histFace,
                               'Histogram of Face Image')

    def display_face_next(self):
        list_len = len(self.listFaces)
        if list_len == 0:
            return
        if list_len != self.listFaceItem + 1:
            self.listFaceItem += 1
        else:
            self.listFaceItem = 0
        extracted_img = cv2.imread(self.listFaces[self.listFaceItem],
                                   cv2.IMREAD_COLOR)
        extracted_img = cv2.cvtColor(extracted_img, cv2.COLOR_BGR2RGB)
        self.display_resulting_image(extracted_img.copy(), self.labelFace)
        self.display_histogram(extracted_img, self.histFace,
                               'Histogram of Face Image')

    def display_eye_next(self):
        list_len = len(self.listEyes)
        if list_len == 0:
            return
        if list_len != self.listEyeItem + 1:
            self.listEyeItem += 1
        else:
            self.listEyeItem = 0
        extracted_img = cv2.imread(self.listEyes[self.listEyeItem],
                                   cv2.IMREAD_COLOR)
        extracted_img = cv2.cvtColor(extracted_img, cv2.COLOR_BGR2RGB)
        self.display_resulting_image(extracted_img.copy(), self.labelEye)
        self.display_histogram(extracted_img, self.histEye,
                               'Histogram of Eye Image')

    def display_eye_prev(self):
        list_len = len(self.listEyes)
        if list_len == 0:
            return
        if self.listEyeItem != 0:
            self.listEyeItem -= 1
        else:
            self.listEyeItem = list_len - 1
        extracted_img = cv2.imread(self.listEyes[self.listEyeItem],
                                   cv2.IMREAD_COLOR)
        extracted_img = cv2.cvtColor(extracted_img, cv2.COLOR_BGR2RGB)
        self.display_resulting_image(extracted_img.copy(), self.labelEye)
        self.display_histogram(extracted_img, self.histEye,
                               'Histogram of Eye Image')

    def display_mouth_next(self):
        list_len = len(self.listMouths)
        if list_len == 0:
            return
        if list_len != self.listMouthItem + 1:
            self.listMouthItem += 1
        else:
            self.listMouthItem = 0
        extracted_img = cv2.imread(self.listMouths[self.listMouthItem],
                                   cv2.IMREAD_COLOR)
        extracted_img = cv2.cvtColor(extracted_img, cv2.COLOR_BGR2RGB)
        self.display_resulting_image(extracted_img.copy(), self.labelMouth)
        self.display_histogram(extracted_img, self.histMouth,
                               'Histogram of Mouth Image')

    def display_mouth_prev(self):
        list_len = len(self.listMouths)
        if list_len == 0:
            return
        if self.listMouthItem != 0:
            self.listMouthItem -= 1
        else:
            self.listMouthItem = list_len - 1
        extracted_img = cv2.imread(self.listMouths[self.listMouthItem],
                                   cv2.IMREAD_COLOR)
        extracted_img = cv2.cvtColor(extracted_img, cv2.COLOR_BGR2RGB)
        self.display_resulting_image(extracted_img.copy(), self.labelMouth)
        self.display_histogram(extracted_img, self.histMouth,
                               'Histogram of Mouth Image')

    def object_detection(self, img):
        # Converts from BGR color space into grayscale for the detectors
        gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
        # Untouched copy for cropping, so drawn rectangles stay out of the crops
        clean = img.copy()

        self.faces = self.face_cascade.detectMultiScale(
            gray, scaleFactor=1.1, minNeighbors=5, minSize=(20, 20),
            flags=cv2.CASCADE_SCALE_IMAGE)
        # extracts faces
        self.face_extraction(clean)

        for (x, y, w, h) in self.faces:
            img = cv2.rectangle(img, (x, y), (x+w, y+h), (0, 0, 255), 3)
            roi_gray = gray[y:y+h, x:x+w]
            roi_color = img[y:y+h, x:x+w]
            roi_clean = clean[y:y+h, x:x+w]

            # detecting eyes inside the face region
            self.eyes = self.eye_cascade.detectMultiScale(
                roi_gray, scaleFactor=1.1, minNeighbors=5, minSize=(10, 10),
                flags=cv2.CASCADE_SCALE_IMAGE)
            # extracts eyes
            self.eye_extraction(roi_clean)
            for (ex, ey, ew, eh) in self.eyes:
                cv2.rectangle(roi_color, (ex, ey), (ex+ew, ey+eh), (255, 0, 0), 3)

            # detecting mouths inside the face region
            self.mouths = self.mouth_cascade.detectMultiScale(
                roi_gray, scaleFactor=1.2, minNeighbors=5, minSize=(30, 30),
                flags=cv2.CASCADE_SCALE_IMAGE)
            # extracts mouths
            self.mouth_extraction(roi_clean)
            for (mx, my, mw, mh) in self.mouths:
                cv2.rectangle(roi_color, (mx, my), (mx+mw, my+mh),
                              (255, 255, 255), 3)

        self.display_resulting_image(img, self.labelResult)

    def do_detection(self):
        test = self.img
        self.object_detection(test)

    def display_histogram(self, img, qwidget1, title):
        axes = qwidget1.canvas.axes1
        axes.clear()
        if img.ndim == 2:
            # Grayscale image
            histr = cv2.calcHist([img], [0], None, [256], [0, 256])
            axes.plot(histr, color='yellow', linewidth=3.0)
        else:
            # Color image, channels in B, G, R order
            color = ('b', 'g', 'r')
            for i, col in enumerate(color):
                histr = cv2.calcHist([img], [i], None, [256], [0, 256])
                axes.plot(histr, color=col, linewidth=3.0)
        axes.set_ylabel('Frequency', color='white')
        axes.set_xlabel('Intensity', color='white')
        axes.tick_params(axis='x', colors='white')
        axes.tick_params(axis='y', colors='white')
        axes.set_title(title, color='white')
        axes.set_facecolor('xkcd:lavender')
        axes.grid(True)
        qwidget1.canvas.draw()


if __name__ == "__main__":
    app = QApplication(sys.argv)
    w = FormObjectDetection()
    w.show()
    sys.exit(app.exec_())
```
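display_resulting_image() passes QImage a buffer in QImage.Format_RGB888, which expects three bytes per pixel in R, G, B order. That is why the method first converts OpenCV's B, G, R order and passes bytesPerLine = 3 * width. The stdlib sketch below performs the same swap and row packing on a tiny list-based image. bgr_to_rgb888() is a hypothetical helper, not part of OpenCV or PyQt5.

```python
def bgr_to_rgb888(rows):
    """Pack an image given as rows of (b, g, r) tuples into RGB888 bytes.

    Returns (data, width, height, bytes_per_line). bytes_per_line is
    3 * width because each pixel takes three bytes and rows are packed
    with no padding, the same value display_resulting_image() uses.
    """
    height = len(rows)
    width = len(rows[0]) if rows else 0
    data = bytearray()
    for row in rows:
        for b, g, r in row:
            data += bytes((r, g, b))  # swap to R, G, B byte order
    return bytes(data), width, height, 3 * width
```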