This content is powered by Balige Publishing. Visit this link (collaboration with Rismon Hasiholan Sianipar).

Step 1: Now, you will create a GUI that detects license plates. Open Qt Designer and choose the Main Window template. Save the form as gui_plate.ui.

Step 2: Download the dataset from https://www.kaggle.com/andrewmvd/car-plate-detection/download and save it to your working directory. Unzip the file and place the two folders, annotations and images, into the working directory.

Step 3: Put one Widget from the Containers panel onto the form and set its objectName property to widgetPlot (the name that the code in the following steps uses). You will use this widget to plot some images.

Step 4: Put three Push Button widgets onto form. Set their text properties as LOAD DATA, TRAIN MODEL, and OPEN TEST IMAGE. Set their objectName properties as pbLoad, pbTrain, and pbOpen.

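Step 5: Put one List Widget onto the form and set its objectName property to lwData. Populate this widget with three items (Train Dataset, Test Dataset, and Validation Dataset, the strings that choose_data() checks for) as shown in the figure below.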

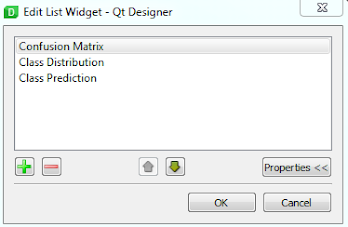

Step 6: Put another List Widget onto form and set its objectName property as lwPlot. Populate this widget with three items as shown in Figure below.

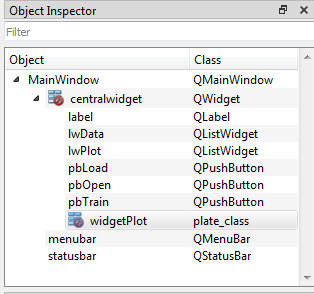

Step 7: Right-click on widgetPlot and choose Promote to …. Set the Promoted class name to plate_class. Click Add, then Promote. In the Object Inspector window, you can see that widgetPlot is now an object of plate_class, as shown in the figure below.

Step 8: Write the definition of plate_class and save it as plate_class.py as follows:

    #plate_class.py
    from PyQt5.QtWidgets import *
    from matplotlib.backends.backend_qt5agg import FigureCanvas
    from matplotlib.figure import Figure

    class plate_class(QWidget):
        def __init__(self, parent=None):
            QWidget.__init__(self, parent)
            # Matplotlib canvas embedded in the Qt widget
            self.canvas = FigureCanvas(Figure())

            vertical_layout = QVBoxLayout()
            vertical_layout.addWidget(self.canvas)

            self.canvas.axis1 = self.canvas.figure.add_subplot(111)
            # subplots_adjust() returns None, so its result is not assigned to axis1
            self.canvas.figure.subplots_adjust(
                top=0.981, bottom=0.049, left=0.042, right=0.981,
                hspace=0.2, wspace=0.2
            )
            self.canvas.figure.set_facecolor("xkcd:salmon")
            self.setLayout(vertical_layout)

Step 9: Write this Python script and save it as detect_plate_gui.py:

    #detect_plate_gui.py
    from PyQt5.QtWidgets import *
    from PyQt5.uic import loadUi
    from matplotlib.backends.backend_qt5agg import (
        NavigationToolbar2QT as NavigationToolbar)
    from matplotlib.colors import ListedColormap

    class DemoGUI_DetectPlate(QMainWindow):
        def __init__(self):
            QMainWindow.__init__(self)
            loadUi("gui_plate.ui", self)
            self.setWindowTitle("GUI Demo of Detecting License Plate")
            self.addToolBar(NavigationToolbar(self.widgetPlot.canvas, self))
            self.set_state(False)

        def set_state(self, state):
            self.pbTrain.setEnabled(state)
            self.pbOpen.setEnabled(state)
            self.lwData.setEnabled(state)
            self.lwPlot.setEnabled(state)
            self.widgetPlot.setEnabled(state)

    if __name__ == '__main__':
        import sys
        app = QApplication(sys.argv)
        ex = DemoGUI_DetectPlate()
        ex.show()
        sys.exit(app.exec_())

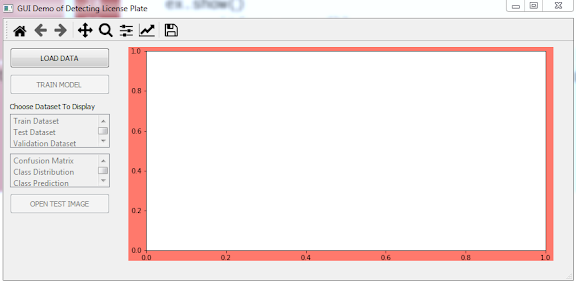

Step 10: Run detect_plate_gui.py to see the state of the form when it first runs, as shown in the figure below.

Step 11: Import all the modules that are needed for this project:

    import seaborn as sns
    import cv2
    import os
    from os import path
    import glob
    from random import seed
    from random import randint
    from lxml import etree
    from sklearn.model_selection import train_test_split
    from tensorflow.keras.models import Sequential
    from tensorflow.keras.layers import Dense, Flatten
    from tensorflow.keras.applications.vgg16 import VGG16
    from tensorflow.keras.models import load_model
    import pandas as pd
    import numpy as np
    from matplotlib import pyplot as plt

Step 12: Define initialize() method to create important variables to determine directory of images, image dimension, number of epochs, and batch size:

    def initialize(self):
        self.batchsize = 32
        self.NUM_EPOCHS = 50
        self.curr_path = os.getcwd()
        self.IMAGE_SIZE = 200

        img_dir = self.curr_path + "/images"  # Enter Directory of all images
        data_path = os.path.join(img_dir, '*g')
        self.files = glob.glob(data_path)
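The pattern '*g' in initialize() matches every file name that ends in the letter g, which covers .png, .jpg, and .jpeg files. The following sketch uses the standard fnmatch module, which applies the same shell-style wildcard rules, to a few made-up file names so you can see what the pattern accepts. The file names are hypothetical and only serve as an illustration.

```python
from fnmatch import fnmatch

# Hypothetical file names, for illustration only
names = ["Cars0.png", "Cars1.jpg", "Cars2.jpeg", "notes.txt", "log"]

# Same wildcard pattern that initialize() passes to glob.glob()
matched = [n for n in names if fnmatch(n, "*g")]
print(matched)  # ['Cars0.png', 'Cars1.jpg', 'Cars2.jpeg', 'log']
```

Note that a stray file such as log would also match, so it is worth keeping the images folder free of anything that is not an image.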

Step 13: Invoke initialize() method and put it inside __init__() method as shown in line 8:

    def __init__(self):
        QMainWindow.__init__(self)
        loadUi("gui_plate.ui", self)
        self.setWindowTitle("GUI Demo of Detecting License Plate")
        self.addToolBar(
            NavigationToolbar(self.widgetPlot.canvas, self))
        self.set_state(False)
        self.initialize()

Step 14: Define generate_X() function to generate X, a list of all images resized to IMAGE_SIZE x IMAGE_SIZE. The image files are sorted in alphabetical order so that they match the xml files containing the annotations of the bounding boxes:

    def generate_X(self, files):
        #Sorts the images in alphabetical order to match them to
        #the xml files containing the annotations of the bounding boxes
        files.sort()
        X = []
        for f1 in files:
            img = cv2.imread(f1)
            img = cv2.resize(img, (self.IMAGE_SIZE, self.IMAGE_SIZE))
            X.append(np.array(img))
        return X

Step 15: Define resize_annotation() function to return a list containing resized xmax, ymax, xmin, and ymin elements in every xml file:

    def resize_annotation(self, f):
        tree = etree.parse(f)
        # Original image dimensions
        for dim in tree.xpath("size"):
            width = int(dim.xpath("width")[0].text)
            height = int(dim.xpath("height")[0].text)
        # Bounding box scaled to the resized image
        for dim in tree.xpath("object/bndbox"):
            xmin = int(dim.xpath("xmin")[0].text)/(width/self.IMAGE_SIZE)
            ymin = int(dim.xpath("ymin")[0].text)/(height/self.IMAGE_SIZE)
            xmax = int(dim.xpath("xmax")[0].text)/(width/self.IMAGE_SIZE)
            ymax = int(dim.xpath("ymax")[0].text)/(height/self.IMAGE_SIZE)
        return [int(xmax), int(ymax), int(xmin), int(ymin)]
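To see what the scaling in resize_annotation() does to one bounding box, here is a small stand-alone sketch. It uses the standard xml.etree.ElementTree module instead of lxml so that it runs without extra packages, and the annotation text and the function name scale_box are made up for this illustration.

```python
import xml.etree.ElementTree as ET

# Hypothetical annotation in the layout that resize_annotation() reads
SAMPLE_XML = """
<annotation>
  <size><width>400</width><height>300</height></size>
  <object><bndbox>
    <xmin>100</xmin><ymin>150</ymin><xmax>300</xmax><ymax>225</ymax>
  </bndbox></object>
</annotation>
"""

def scale_box(xml_text, image_size=200):
    """Scale the bounding box to an image_size x image_size image."""
    root = ET.fromstring(xml_text)
    width = int(root.findtext("size/width"))
    height = int(root.findtext("size/height"))
    box = root.find("object/bndbox")
    xmin = int(box.findtext("xmin")) * image_size / width
    ymin = int(box.findtext("ymin")) * image_size / height
    xmax = int(box.findtext("xmax")) * image_size / width
    ymax = int(box.findtext("ymax")) * image_size / height
    # Same element order as resize_annotation(): xmax, ymax, xmin, ymin
    return [int(xmax), int(ymax), int(xmin), int(ymin)]

print(scale_box(SAMPLE_XML))  # [150, 150, 50, 100]
```

A 400 x 300 image is shrunk by a factor of 2 horizontally and 1.5 vertically, so the x coordinates are halved and the y coordinates are divided by 1.5.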

Step 15: Define generate_y() function to invoke resize_annotation() on every xml file in annotations folder:

    def generate_y(self):
        # Named ann_dir so that it does not shadow the imported os.path
        ann_dir = self.curr_path + '/annotations'
        text_files = [self.curr_path + '/annotations/' + f
                      for f in sorted(os.listdir(ann_dir))]
        y = []
        for i in text_files:
            y.append(self.resize_annotation(i))
        return y
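generate_X() and generate_y() only produce matching pairs if both folders sort into the same order. The following is a minimal sketch of a sanity check for that assumption, using only the standard library. The helper name stems_match and the sample file names are hypothetical and not part of the original script.

```python
import os

def stems_match(image_files, annotation_files):
    """Return True if sorted images and annotations pair up by base name."""
    image_stems = [os.path.splitext(os.path.basename(f))[0]
                   for f in sorted(image_files)]
    xml_stems = [os.path.splitext(os.path.basename(f))[0]
                 for f in sorted(annotation_files)]
    return image_stems == xml_stems

# Hypothetical file lists, deliberately given out of order
images = ["images/Cars10.png", "images/Cars2.png", "images/Cars1.png"]
annotations = ["annotations/Cars1.xml", "annotations/Cars2.xml",
               "annotations/Cars10.xml"]

print(stems_match(images, annotations))      # True
print(stems_match(images, annotations[:2]))  # False
```

You could call such a check once before building X and y, so that a missing or extra file is noticed before training starts.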

Step 16: Define load_data() method to generate training, test, and validation data and save them into npy files:

    def load_data(self):
        X = self.generate_X(self.files)
        y = self.generate_y()

        #Transforms into array
        X = np.array(X)
        y = np.array(y)

        #Normalisation (predictions are scaled back by 255 later on)
        X = X / 255
        y = y / 255

        X_train, X_test, y_train, y_test = train_test_split(X, y,
            test_size=0.2, random_state=1)
        X_train, X_val, y_train, y_val = train_test_split(X_train,
            y_train, test_size=0.1, random_state=1)

        #Saves into npy files
        np.save('X_train.npy', X_train)
        np.save('y_train.npy', y_train)
        np.save('X_test.npy', X_test)
        np.save('y_test.npy', y_test)
        #Saves the validation split (not a copy of the test split)
        np.save('X_val.npy', X_val)
        np.save('y_val.npy', y_val)

        #Turns off pbLoad
        self.pbLoad.setEnabled(False)

        #Turns on other widgets
        self.set_state(True)
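The two calls to train_test_split() first hold out 20% of the data for testing and then 10% of the remainder for validation. The sketch below estimates how many samples land in each set. It assumes that the held-out share is rounded up, which is an assumption about how train_test_split() rounds and is worth checking against your installed scikit-learn. The function name split_sizes is hypothetical.

```python
import math

def split_sizes(n_samples, test_size=0.2, val_size=0.1):
    """Estimate (train, validation, test) counts for the two-stage split."""
    # Assumption: the held-out part is rounded up
    n_test = math.ceil(test_size * n_samples)
    n_remaining = n_samples - n_test
    n_val = math.ceil(val_size * n_remaining)
    n_train = n_remaining - n_val
    return n_train, n_val, n_test

print(split_sizes(1000))  # (720, 80, 200)
```

So the validation set is 10% of what is left after the test split, which is 8% of the whole dataset rather than 10%.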

Step 17: Connect the clicked signal of pbLoad to load_data() method and put the connection inside __init__() method:

self.pbLoad.clicked.connect(self.load_data)

Step 18: Define check_file_train() method to check whether or not train file exists:

    def check_file_train(self):
        if path.isfile('X_train.npy'):
            self.pbLoad.setEnabled(False)
            self.set_state(True)
        else:
            self.pbLoad.setEnabled(True)
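check_file_train() looks only at X_train.npy, although load_data() writes six files. If you want a stricter check, one possible approach is to list which of the expected files are missing. This is a sketch under that assumption. The names EXPECTED and missing_files are hypothetical, and a temporary folder stands in for the working directory.

```python
from pathlib import Path
import tempfile

# The six files that load_data() saves
EXPECTED = ["X_train.npy", "y_train.npy", "X_test.npy",
            "y_test.npy", "X_val.npy", "y_val.npy"]

def missing_files(folder, names=EXPECTED):
    """Return the expected file names that do not exist in folder."""
    return [n for n in names if not (Path(folder) / n).is_file()]

with tempfile.TemporaryDirectory() as tmp:
    (Path(tmp) / "X_train.npy").touch()   # pretend only one file was saved
    print(missing_files(tmp))             # the other five names
```

An empty list would then mean that LOAD DATA can stay disabled.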

Step 19: Invoke check_file_train() method and put it inside __init__() method as shown in line 10:

    def __init__(self):
        QMainWindow.__init__(self)
        loadUi("gui_plate.ui", self)
        self.setWindowTitle("GUI Demo of Detecting License Plate")
        self.addToolBar(
            NavigationToolbar(self.widgetPlot.canvas, self))
        self.set_state(False)
        self.initialize()
        self.pbLoad.clicked.connect(self.load_data)
        self.check_file_train()

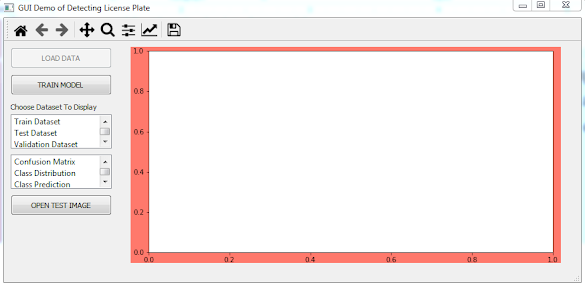

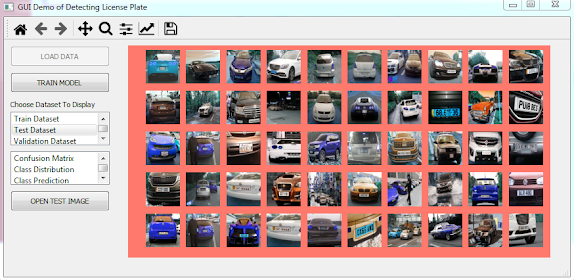

Step 20: Run detect_plate_gui.py and click LOAD DATA. You will find training, test, and validation data files in the working directory. Quit application and run it again. You will see that LOAD DATA button is disabled and other widgets are enabled as shown in Figure below.

Step 21: Define show_image() method to display some images in a widget:

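    def show_image(self, widget, dataX, dataY, imagePerRow, n=50):
        # Fixed seed, so the same starting offset is used on every call
        seed(1)
        rand = randint(0, 20)
        # Do not read past the end of a small split
        n = min(n, len(dataX) - rand)
        if n <= 0:
            return
        row = int(np.ceil(n / imagePerRow))
        for i in range(n):
            widget.canvas.axis1 = \
                widget.canvas.figure.add_subplot(row, imagePerRow, i+1)
            # Box is stored as [xmax, ymax, xmin, ymin] divided by 255
            ny = dataY[rand+i]*255
            image = cv2.rectangle(dataX[rand+i],
                (int(ny[0]), int(ny[1])), (int(ny[2]), int(ny[3])),
                (0, 255, 0))
            widget.canvas.axis1.clear()
            widget.canvas.axis1.set_xticks([])
            widget.canvas.axis1.set_yticks([])
            # Pixels are in [0, 1]; clip so that the rectangle value (255)
            # does not overflow when converting to uint8
            image = np.clip(image, 0, 1)
            widget.canvas.axis1.imshow((image*255).astype('uint8'))
            widget.canvas.axis1.axis('off')
        #plt.tight_layout()
        widget.canvas.draw()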
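show_image() seeds the random generator with a fixed value right before it draws the offset, so the same offset comes back every time and the grid always shows the same consecutive run of images. The short sketch below demonstrates that effect with the standard random module only.

```python
from random import seed, randint

offsets = []
for i in range(5):
    seed(1)                       # re-seeding resets the generator
    offsets.append(randint(0, 20))

print(offsets)                    # five identical values
print(len(set(offsets)))          # 1
```

If you prefer a different selection on every run, one option is to drop the seed() call, at the cost of results that are no longer reproducible.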

Step 22: Define choose_data() method to read the selected item of the lwData widget and to plot the training, test, or validation data according to what the user chooses:

    def choose_data(self):
        item = self.lwData.currentItem()
        strList = item.text()

        if strList == 'Train Dataset':
            self.widgetPlot.canvas.figure.clf()
            X_train = np.load('X_train.npy', allow_pickle=True)
            y_train = np.load('y_train.npy', allow_pickle=True)
            self.show_image(self.widgetPlot, X_train, y_train, 10, 50)

        if strList == 'Test Dataset':
            self.widgetPlot.canvas.figure.clf()
            X_test = np.load('X_test.npy', allow_pickle=True)
            y_test = np.load('y_test.npy', allow_pickle=True)
            self.show_image(self.widgetPlot, X_test, y_test, 10, 50)

        if strList == 'Validation Dataset':
            self.widgetPlot.canvas.figure.clf()
            X_val = np.load('X_val.npy', allow_pickle=True)
            y_val = np.load('y_val.npy', allow_pickle=True)
            self.show_image(self.widgetPlot, X_val, y_val, 10, 50)
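The three branches of choose_data() differ only in the file names they load. As an optional alternative, the mapping from item text to files can be kept in a dictionary. This is only a sketch of that idea. The names DATASET_FILES and files_for are hypothetical, and the item texts must match the entries you typed into lwData exactly.

```python
# Item text shown in lwData -> (image file, bounding-box file)
DATASET_FILES = {
    "Train Dataset": ("X_train.npy", "y_train.npy"),
    "Test Dataset": ("X_test.npy", "y_test.npy"),
    "Validation Dataset": ("X_val.npy", "y_val.npy"),
}

def files_for(item_text):
    """Return the pair of npy file names for a list item, or None."""
    return DATASET_FILES.get(item_text)

print(files_for("Test Dataset"))   # ('X_test.npy', 'y_test.npy')
print(files_for("Unknown"))        # None
```

Inside choose_data() you would then load the two returned files and call show_image() once, instead of repeating the same block three times.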

Step 23: Connect the clicked signal of the lwData widget to choose_data() method and put the connection inside __init__() method as shown in line 11:

    def __init__(self):
        QMainWindow.__init__(self)
        loadUi("gui_plate.ui", self)
        self.setWindowTitle("GUI Demo of Detecting License Plate")
        self.addToolBar(
            NavigationToolbar(self.widgetPlot.canvas, self))
        self.set_state(False)
        self.initialize()
        self.pbLoad.clicked.connect(self.load_data)
        self.check_file_train()
        self.lwData.clicked.connect(self.choose_data)

Step 24: Run detect_plate_gui.py and choose Train Dataset from lwData widget. You will see 50 images of training dataset as shown in Figure below.

Then, choose Test Dataset from lwData widget. You will see 50 images of test dataset as shown in Figure below.

Step 25: Open gui_plate.ui with Qt Designer. Add one more Widget from the Containers panel onto the upper right-hand side of the form and set its objectName property to widgetAnalysis. The code in Step 41 also refers to a widget named widgetPrediction, so add a second Widget with that objectName as well.

Step 26: Right-click each of the two new widgets and choose Promote to …. Set the Promoted class name to analysis_class. Write a new Python script and save it as analysis_class.py:

    #analysis_class.py
    from PyQt5.QtWidgets import *
    from matplotlib.backends.backend_qt5agg import FigureCanvas
    from matplotlib.figure import Figure

    class analysis_class(QWidget):
        def __init__(self, parent=None):
            QWidget.__init__(self, parent)
            self.canvas = FigureCanvas(Figure())

            vertical_layout = QVBoxLayout()
            vertical_layout.addWidget(self.canvas)

            self.canvas.axis1 = self.canvas.figure.add_subplot(111)
            self.canvas.figure.set_facecolor("xkcd:chartreuse")
            self.setLayout(vertical_layout)

Step 27: Define plot_histogram() method to display distribution of number of images in every set (training, test, and validation):

    def plot_histogram(self, widget):
        widget.canvas.axis1.clear()

        #Loads npy files
        y_train = np.load('y_train.npy', allow_pickle=True)
        y_val = np.load('y_val.npy', allow_pickle=True)
        y_test = np.load('y_test.npy', allow_pickle=True)

        #Counts the samples in every set
        y = dict()
        y[0] = []
        for size in (y_train, y_val, y_test):
            y[0].append(size.shape[0])

        x = ['Train Set', 'Validation Set', 'Test Set']
        df = pd.DataFrame({'Set': x, 'Count_All': y[0]})
        df1 = df[df.filter(regex='Count_').sum(axis=1).ge(7)]
        df1 = pd.wide_to_long(df1, stubnames=['Count'],
            i='Set', j='Image', sep='_', suffix='.*')
        g = sns.barplot(x='Set', y='Count', hue='Image',
            ax=widget.canvas.axis1, data=df1.reset_index(),
            palette=['tomato'])
        g.legend_.remove()
        widget.canvas.axis1.set_title('Count of images in each set')
        widget.canvas.axis1.grid()
        widget.canvas.draw()

Step 28: Define choose_plot() method to read selected item in lwPlot widget:

    def choose_plot(self):
        item = self.lwPlot.currentItem()
        strList = item.text()

        if strList == 'Class Distribution':
            self.plot_histogram(self.widgetAnalysis)

Step 29: Connect the clicked signal of the lwPlot widget to choose_plot() method and put the connection inside __init__() method as shown in line 12:

    def __init__(self):
        QMainWindow.__init__(self)
        loadUi("gui_plate.ui", self)
        self.setWindowTitle("GUI Demo of Detecting License Plate")
        self.addToolBar(
            NavigationToolbar(self.widgetPlot.canvas, self))
        self.set_state(False)
        self.initialize()
        self.pbLoad.clicked.connect(self.load_data)
        self.check_file_train()
        self.lwData.clicked.connect(self.choose_data)
        self.lwPlot.clicked.connect(self.choose_plot)

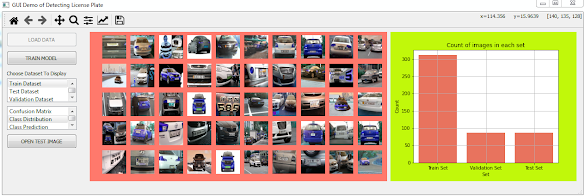

Step 30: Run detect_plate_gui.py and choose Class Distribution from the lwPlot widget. You will see the number of images in every set (training, test, and validation) displayed on widgetAnalysis, as shown in the figure below:

Step 31: Define train_model() method to create and train VGG16 model. It also saves the trained model into h5 file and its history into npy file:

    def train_model(self):
        # Creates the model
        model = Sequential()
        model.add(VGG16(weights="imagenet", include_top=False,
            input_shape=(self.IMAGE_SIZE, self.IMAGE_SIZE, 3)))
        model.add(Flatten())
        model.add(Dense(128, activation="relu"))
        model.add(Dense(128, activation="relu"))
        model.add(Dense(64, activation="relu"))
        model.add(Dense(4, activation="sigmoid"))

        # layers[-6] is the VGG16 base; its weights are frozen
        model.layers[-6].trainable = False
        model.summary()

        model.compile(loss='mean_squared_error', optimizer='adam',
            metrics=['accuracy'])

        #Loads npy files
        X_train = np.load('X_train.npy', allow_pickle=True)
        y_train = np.load('y_train.npy', allow_pickle=True)
        X_test = np.load('X_test.npy', allow_pickle=True)
        y_test = np.load('y_test.npy', allow_pickle=True)

        train = model.fit(X_train, y_train,
            validation_data=(X_test, y_test),
            epochs=self.NUM_EPOCHS, batch_size=self.batchsize,
            verbose=1)

        #Saves the model
        model.save('plate_cnn.h5')

        #Saves history into npy file
        history_dict = train.history
        np.save('history_plate_cnn.npy', history_dict)

        self.pbTrain.setEnabled(False)

Step 32: Connect the clicked signal of the pbTrain widget to train_model() method and put the connection inside __init__() method.

Step 33: Define check_file_model() method to check whether or not the trained model file (plate_cnn.h5) exists:

    def check_file_model(self):
        if path.isfile('plate_cnn.h5'):
            self.pbTrain.setEnabled(False)
        else:
            self.pbTrain.setEnabled(True)

Step 34: Invoke check_file_model() method in __init__() method as shown in line 14:

    def __init__(self):
        QMainWindow.__init__(self)
        loadUi("gui_plate.ui", self)
        self.setWindowTitle("GUI Demo of Detecting License Plate")
        self.addToolBar(
            NavigationToolbar(self.widgetPlot.canvas, self))
        self.set_state(False)
        self.initialize()
        self.pbLoad.clicked.connect(self.load_data)
        self.check_file_train()
        self.lwData.clicked.connect(self.choose_data)
        self.lwPlot.clicked.connect(self.choose_plot)
        self.pbTrain.clicked.connect(self.train_model)
        self.check_file_model()

Step 35: Run detect_plate_gui.py and click on TRAIN MODEL button. Quit application and run it again. You will see that the button is already disabled.

Step 36: Open gui_plate.ui with Qt Designer. Add two more items in lwPlot widget: Loss Graph and Accuracy Graph.

Step 37: Define plot_loss_acc() method to plot accuracy or loss graph:

    def plot_loss_acc(self, widget, strPlot):
        # Loads history
        history = \
            np.load('history_plate_cnn.npy', allow_pickle=True).item()
        train_loss = history['loss']
        val_loss = history['val_loss']
        train_acc = history['accuracy']
        val_acc = history['val_accuracy']

        if strPlot == 'Loss':
            widget.canvas.axis1.clear()
            widget.canvas.axis1.plot(train_loss,
                label='Training Loss', linewidth=3.0)
            widget.canvas.axis1.plot(val_loss,
                label='Validation Loss', linewidth=3.0)
            widget.canvas.axis1.set_title('Loss')
            widget.canvas.axis1.set_xlabel('Epoch')
            widget.canvas.axis1.grid()
            widget.canvas.axis1.legend()
            widget.canvas.draw()
        else:
            widget.canvas.axis1.clear()
            widget.canvas.axis1.plot(train_acc,
                label='Training Accuracy', linewidth=3.0)
            widget.canvas.axis1.plot(val_acc,
                label='Validation Accuracy', linewidth=3.0)
            widget.canvas.axis1.set_title('Accuracy')
            widget.canvas.axis1.set_xlabel('Epoch')
            widget.canvas.axis1.grid()
            widget.canvas.axis1.legend()
            widget.canvas.draw()

Step 38: Add this code to the end of choose_plot() method:

    if strList == 'Accuracy Graph':
        self.plot_loss_acc(self.widgetAnalysis, 'Acc')

    if strList == 'Loss Graph':
        self.plot_loss_acc(self.widgetAnalysis, 'Loss')

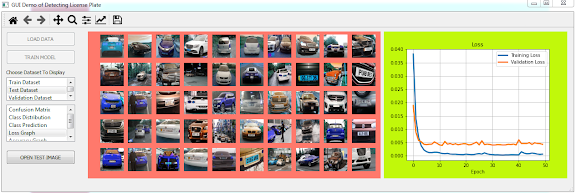

Step 39: Run detect_plate_gui.py and choose Loss Graph from lwPlot. You will see loss versus epoch graph displayed on widgetAnalysis as shown in Figure below.

Then, choose Accuracy Graph from lwPlot. You will see accuracy versus epoch graph displayed on widgetAnalysis as shown in Figure below.

Step 40: Define show_prediction() method to display some images on a widget and detect license plate in each one of them:

    def show_prediction(self, widget1, widget2, dataX, dataY,
                        imagePerRow, n=10):
        row = int(n/imagePerRow)
        # Fixed seed, so the same starting offset is used on every call
        seed(1)
        rand = randint(0, 20)
        for i in range(n):
            widget1.canvas.axis1 = \
                widget1.canvas.figure.add_subplot(row, imagePerRow, i+1)
            # Predicted box is [xmax, ymax, xmin, ymin] divided by 255
            ny = dataY[rand+i]*255
            image = cv2.rectangle(dataX[rand+i],
                (int(ny[0]), int(ny[1])), (int(ny[2]), int(ny[3])),
                (0, 255, 0), 3)
            cv2.putText(image, 'plate', (int(ny[2]), int(ny[3])-10),
                cv2.FONT_HERSHEY_SIMPLEX, 0.9, (0, 255, 0), 2)
            widget1.canvas.axis1.clear()
            widget1.canvas.axis1.set_xticks([])
            widget1.canvas.axis1.set_yticks([])
            image = np.clip(image, 0, 1)
            widget1.canvas.axis1.imshow(image)
            widget1.canvas.axis1.axis('off')

            #Displays cropped license plate
            widget2.canvas.axis1 = \
                widget2.canvas.figure.add_subplot(row, imagePerRow, i+1)
            img = dataX[rand+i]
            cropped_image = img[int(ny[3]):int(ny[1]),
                                int(ny[2]):int(ny[0])]
            widget2.canvas.axis1.clear()
            widget2.canvas.axis1.set_xticks([])
            widget2.canvas.axis1.set_yticks([])
            cropped_image = np.clip(cropped_image, 0, 1)
            widget2.canvas.axis1.imshow(cropped_image)
            widget2.canvas.axis1.axis('off')

        widget1.canvas.draw()
        widget2.canvas.draw()

Step 41: Add this code to the end of choose_plot() method:

    if strList == 'Class Prediction':
        self.widgetPrediction.canvas.figure.clf()
        self.widgetPlot.canvas.figure.clf()

        #Loads test dataset
        X_test = np.load('X_test.npy', allow_pickle=True)

        #Loads model
        model = load_model('plate_cnn.h5')
        y_cnn = model.predict(X_test)
        self.show_prediction(self.widgetPrediction,
            self.widgetPlot, X_test, y_cnn, 6, 12)

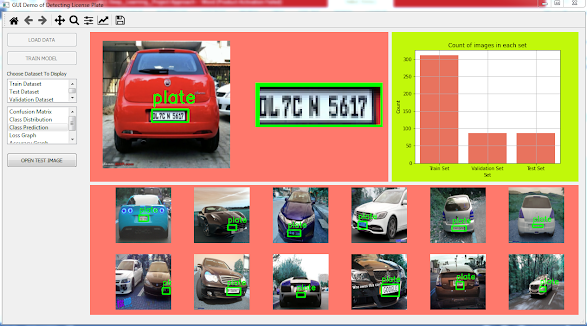

Step 42: Run detect_plate_gui.py and choose Class Prediction from lwPlot widget. You will see detected license plate in 12 random test images as shown in Figure below.

Step 43: Define open_image() method to open file dialog and read chosen image:

    def open_image(self):
        fname = QFileDialog.getOpenFileName(self, 'Open file',
            'd:\\', "Image Files (*.jpg *.gif *.bmp *.png)")
        #pixmap = QPixmap(fname[0])
        # fname[0] is an empty string if the dialog was cancelled
        if not fname[0]:
            return None
        img = cv2.imread(fname[0], cv2.IMREAD_COLOR)
        return img

Step 44: Define check_plate() method to detect any license plate in an image and display it on a widget:

    def check_plate(self, image, widget):
        #Loads model
        model = load_model('plate_cnn.h5')

        #Resizes and scales the image the same way as the training data
        image = cv2.resize(image, (self.IMAGE_SIZE, self.IMAGE_SIZE),
            interpolation=cv2.INTER_AREA)
        img_scaled = image/255.0
        reshape = np.reshape(img_scaled,
            (1, self.IMAGE_SIZE, self.IMAGE_SIZE, 3))
        xtest = reshape
        y_cnn = model.predict(xtest)

        #Scales the prediction back to pixel coordinates
        pred = y_cnn*255
        ny = np.array(pred[0])
        image = cv2.rectangle(image,
            (int(ny[0]), int(ny[1])), (int(ny[2]), int(ny[3])),
            (0, 255, 0), 2)
        cv2.putText(image, 'plate', (int(ny[2]), int(ny[3])-10),
            cv2.FONT_HERSHEY_SIMPLEX, 0.9, (0, 255, 0), 2)

        #Left: image with the detected bounding box
        widget.canvas.axis1 = widget.canvas.figure.add_subplot(121)
        widget.canvas.axis1.clear()
        widget.canvas.axis1.set_xticks([])
        widget.canvas.axis1.set_yticks([])
        widget.canvas.axis1.imshow(cv2.cvtColor(image,
            cv2.COLOR_BGR2RGB))
        widget.canvas.axis1.axis('off')
        widget.canvas.draw()

        #Right: cropped license plate
        widget.canvas.axis1 = widget.canvas.figure.add_subplot(122)
        cropped_image = image[int(ny[3]):int(ny[1]),
                              int(ny[2]):int(ny[0])]
        widget.canvas.axis1.clear()
        widget.canvas.axis1.set_xticks([])
        widget.canvas.axis1.set_yticks([])
        widget.canvas.axis1.imshow(cv2.cvtColor(cropped_image,
            cv2.COLOR_BGR2RGB))
        widget.canvas.axis1.axis('off')
        widget.canvas.draw()
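check_plate() turns the four network outputs into pixel coordinates by multiplying by 255 and then slices the image as [ymin:ymax, xmin:xmax]. The sketch below isolates that step in plain Python. The function name to_crop_slices is hypothetical, and the clamping to the image size is an addition that the original code does not perform.

```python
def to_crop_slices(pred, scale=255, image_size=200):
    """Convert [xmax, ymax, xmin, ymin] fractions into (rows, cols) slices."""
    xmax, ymax, xmin, ymin = (int(v * scale) for v in pred)

    # Added safety step (not in the original): keep values inside the image
    def clamp(v):
        return max(0, min(v, image_size))

    x0, x1 = sorted((clamp(xmin), clamp(xmax)))
    y0, y1 = sorted((clamp(ymin), clamp(ymax)))
    return slice(y0, y1), slice(x0, x1)

# Hypothetical network output in the order xmax, ymax, xmin, ymin
rows, cols = to_crop_slices([0.5, 0.25, 0.125, 0.0625])
print(rows, cols)  # slice(15, 63, None) slice(31, 127, None)
```

With a NumPy image you would then crop with image[rows, cols]. Sorting each pair also guards against a prediction in which the minimum ends up larger than the maximum.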

Step 45: Define detect_plate() to invoke open_image() and check_plate() methods:

    def detect_plate(self):
        image = self.open_image()
        # Nothing to do if no readable image was chosen
        if image is None:
            return
        self.widgetPlot.canvas.figure.clf()
        self.check_plate(image, self.widgetPlot)

Step 46: Connect the clicked signal of the pbOpen widget to detect_plate() method and put the connection inside __init__() method as shown in line 15:

    def __init__(self):
        QMainWindow.__init__(self)
        loadUi("gui_plate.ui", self)
        self.setWindowTitle("GUI Demo of Detecting License Plate")
        self.addToolBar(
            NavigationToolbar(self.widgetPlot.canvas, self))
        self.set_state(False)
        self.initialize()
        self.pbLoad.clicked.connect(self.load_data)
        self.check_file_train()
        self.lwData.clicked.connect(self.choose_data)
        self.lwPlot.clicked.connect(self.choose_plot)
        self.pbTrain.clicked.connect(self.train_model)
        self.check_file_model()
        self.pbOpen.clicked.connect(self.detect_plate)

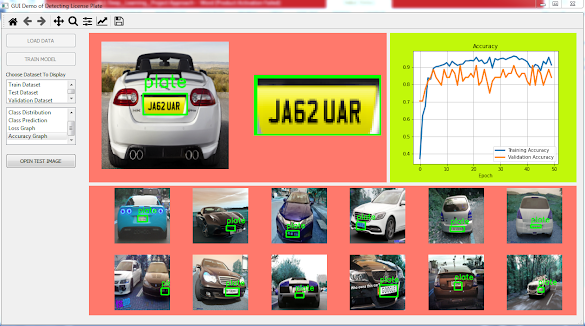

Step 47: Run detect_plate_gui.py and click the OPEN TEST IMAGE button. Choose an image that contains a license plate. The detected license plate will be displayed on widgetPlot as shown in the figure below.

Choose two other images that contain a license plate. The detected license plates will be displayed on widgetPlot as shown in the two figures below.

Following is the full version of detect_plate_gui.py:

    #detect_plate_gui.py
    from PyQt5.QtWidgets import *
    from PyQt5.uic import loadUi
    from matplotlib.backends.backend_qt5agg import (
        NavigationToolbar2QT as NavigationToolbar)
    from matplotlib.colors import ListedColormap
    import seaborn as sns
    import cv2
    import os
    from os import path
    import glob
    from lxml import etree
    from sklearn.model_selection import train_test_split
    from tensorflow.keras.models import Sequential
    from tensorflow.keras.layers import Dense, Flatten
    from tensorflow.keras.applications.vgg16 import VGG16
    from tensorflow.keras.models import load_model
    import pandas as pd
    import numpy as np
    from matplotlib import pyplot as plt
    from random import seed
    from random import randint

    class DemoGUI_DetectPlate(QMainWindow):
        def __init__(self):
            QMainWindow.__init__(self)
            loadUi("gui_plate.ui", self)
            self.setWindowTitle("GUI Demo of Detecting License Plate")
            self.addToolBar(
                NavigationToolbar(self.widgetPlot.canvas, self))
            self.set_state(False)
            self.initialize()
            self.pbLoad.clicked.connect(self.load_data)
            self.check_file_train()
            self.lwData.clicked.connect(self.choose_data)
            self.lwPlot.clicked.connect(self.choose_plot)
            self.pbTrain.clicked.connect(self.train_model)
            self.check_file_model()
            self.pbOpen.clicked.connect(self.detect_plate)

        def set_state(self, state):
            self.pbTrain.setEnabled(state)
            self.pbOpen.setEnabled(state)
            self.lwData.setEnabled(state)
            self.lwPlot.setEnabled(state)
            self.widgetPlot.setEnabled(state)

        def initialize(self):
            self.batchsize = 32
            self.NUM_EPOCHS = 50
            self.curr_path = os.getcwd()
            self.IMAGE_SIZE = 200

            img_dir = self.curr_path + "/images"  # Enter Directory of all images
            data_path = os.path.join(img_dir, '*g')
            self.files = glob.glob(data_path)

        def generate_X(self, files):
            #Sorts the images in alphabetical order to match them to
            #the xml files containing the annotations of the bounding boxes
            files.sort()
            X = []
            for f1 in files:
                img = cv2.imread(f1)
                img = cv2.resize(img, (self.IMAGE_SIZE, self.IMAGE_SIZE))
                X.append(np.array(img))
            return X

        def generate_y(self):
            # Named ann_dir so that it does not shadow the imported os.path
            ann_dir = self.curr_path + '/annotations'
            text_files = [self.curr_path + '/annotations/' + f
                          for f in sorted(os.listdir(ann_dir))]
            y = []
            for i in text_files:
                y.append(self.resize_annotation(i))
            return y

        def resize_annotation(self, f):
            tree = etree.parse(f)
            for dim in tree.xpath("size"):
                width = int(dim.xpath("width")[0].text)
                height = int(dim.xpath("height")[0].text)
            for dim in tree.xpath("object/bndbox"):
                xmin = int(dim.xpath("xmin")[0].text)/(width/self.IMAGE_SIZE)
                ymin = int(dim.xpath("ymin")[0].text)/(height/self.IMAGE_SIZE)
                xmax = int(dim.xpath("xmax")[0].text)/(width/self.IMAGE_SIZE)
                ymax = int(dim.xpath("ymax")[0].text)/(height/self.IMAGE_SIZE)
            return [int(xmax), int(ymax), int(xmin), int(ymin)]

        def load_data(self):
            X = self.generate_X(self.files)
            y = self.generate_y()

            #Transforms into array
            X = np.array(X)
            y = np.array(y)

            #Normalisation
            X = X / 255
            y = y / 255

            X_train, X_test, y_train, y_test = train_test_split(X, y,
                test_size=0.2, random_state=1)
            X_train, X_val, y_train, y_val = train_test_split(X_train,
                y_train, test_size=0.1, random_state=1)

            #Saves into npy files
            np.save('X_train.npy', X_train)
            np.save('y_train.npy', y_train)
            np.save('X_test.npy', X_test)
            np.save('y_test.npy', y_test)
            #Saves the validation split (not a copy of the test split)
            np.save('X_val.npy', X_val)
            np.save('y_val.npy', y_val)

            #Turns off pbLoad
            self.pbLoad.setEnabled(False)

            #Turns on other widgets
            self.set_state(True)

        def check_file_train(self):
            if path.isfile('X_train.npy'):
                self.pbLoad.setEnabled(False)
                self.set_state(True)
            else:
                self.pbLoad.setEnabled(True)

        def choose_data(self):
            item = self.lwData.currentItem()
            strList = item.text()

            if strList == 'Train Dataset':
                self.widgetPlot.canvas.figure.clf()
                X_train = np.load('X_train.npy', allow_pickle=True)
                y_train = np.load('y_train.npy', allow_pickle=True)
                self.show_image(self.widgetPlot, X_train, y_train, 10, 50)

            if strList == 'Test Dataset':
                self.widgetPlot.canvas.figure.clf()
                X_test = np.load('X_test.npy', allow_pickle=True)
                y_test = np.load('y_test.npy', allow_pickle=True)
                self.show_image(self.widgetPlot, X_test, y_test, 10, 50)

            if strList == 'Validation Dataset':
                self.widgetPlot.canvas.figure.clf()
                X_val = np.load('X_val.npy', allow_pickle=True)
                y_val = np.load('y_val.npy', allow_pickle=True)
                self.show_image(self.widgetPlot, X_val, y_val, 10, 50)

        def show_image(self, widget, dataX, dataY, imagePerRow, n=50):
            # Fixed seed, so the same starting offset is used on every call
            seed(1)
            rand = randint(0, 20)
            # Do not read past the end of a small split
            n = min(n, len(dataX) - rand)
            if n <= 0:
                return
            row = int(np.ceil(n / imagePerRow))
            for i in range(n):
                widget.canvas.axis1 = \
                    widget.canvas.figure.add_subplot(row, imagePerRow, i+1)
                ny = dataY[rand+i]*255
                image = cv2.rectangle(dataX[rand+i],
                    (int(ny[0]), int(ny[1])), (int(ny[2]), int(ny[3])),
                    (0, 255, 0))
                widget.canvas.axis1.clear()
                widget.canvas.axis1.set_xticks([])
                widget.canvas.axis1.set_yticks([])
                # Clip so that the rectangle value (255) does not
                # overflow when converting to uint8
                image = np.clip(image, 0, 1)
                widget.canvas.axis1.imshow((image*255).astype('uint8'))
                widget.canvas.axis1.axis('off')
            #plt.tight_layout()
            widget.canvas.draw()

        def plot_histogram(self, widget):
            widget.canvas.axis1.clear()

            #Loads npy files
            y_train = np.load('y_train.npy', allow_pickle=True)
            y_val = np.load('y_val.npy', allow_pickle=True)
            y_test = np.load('y_test.npy', allow_pickle=True)

            y = dict()
            y[0] = []
            for size in (y_train, y_val, y_test):
                y[0].append(size.shape[0])

            x = ['Train Set', 'Validation Set', 'Test Set']
            df = pd.DataFrame({'Set': x, 'Count_All': y[0]})
            df1 = df[df.filter(regex='Count_').sum(axis=1).ge(7)]
            df1 = pd.wide_to_long(df1, stubnames=['Count'],
                i='Set', j='Image', sep='_', suffix='.*')
            g = sns.barplot(x='Set', y='Count', hue='Image',
                ax=widget.canvas.axis1, data=df1.reset_index(),
                palette=['tomato'])
            g.legend_.remove()
            widget.canvas.axis1.set_title('Count of images in each set')
            widget.canvas.axis1.grid()
            widget.canvas.draw()

        def choose_plot(self):
            item = self.lwPlot.currentItem()
            strList = item.text()

            if strList == 'Class Distribution':
                self.plot_histogram(self.widgetAnalysis)

            if strList == 'Accuracy Graph':
                self.plot_loss_acc(self.widgetAnalysis, 'Acc')

            if strList == 'Loss Graph':
                self.plot_loss_acc(self.widgetAnalysis, 'Loss')

            if strList == 'Class Prediction':
                self.widgetPrediction.canvas.figure.clf()
                self.widgetPlot.canvas.figure.clf()

                #Loads test dataset
                X_test = np.load('X_test.npy', allow_pickle=True)

                #Loads model
                model = load_model('plate_cnn.h5')
                y_cnn = model.predict(X_test)
                self.show_prediction(self.widgetPrediction,
                    self.widgetPlot, X_test, y_cnn, 6, 12)

        def train_model(self):
            # Create the model
            model = Sequential()
            model.add(VGG16(weights="imagenet", include_top=False,
                input_shape=(self.IMAGE_SIZE, self.IMAGE_SIZE, 3)))
            model.add(Flatten())
            model.add(Dense(128, activation="relu"))
            model.add(Dense(128, activation="relu"))
            model.add(Dense(64, activation="relu"))
            model.add(Dense(4, activation="sigmoid"))

            # layers[-6] is the VGG16 base; its weights are frozen
            model.layers[-6].trainable = False
            model.summary()

            model.compile(loss='mean_squared_error',
                optimizer='adam', metrics=['accuracy'])

            #Loads npy files
            X_train = np.load('X_train.npy', allow_pickle=True)
            y_train = np.load('y_train.npy', allow_pickle=True)
            X_test = np.load('X_test.npy', allow_pickle=True)
            y_test = np.load('y_test.npy', allow_pickle=True)

            train = model.fit(X_train, y_train,
                validation_data=(X_test, y_test),
                epochs=self.NUM_EPOCHS, batch_size=self.batchsize,
                verbose=1)

            #Saves the model
            model.save('plate_cnn.h5')

            #Saves history into npy file
            history_dict = train.history
            np.save('history_plate_cnn.npy', history_dict)

            self.pbTrain.setEnabled(False)

        def check_file_model(self):
            if path.isfile('plate_cnn.h5'):
                self.pbTrain.setEnabled(False)
            else:
                self.pbTrain.setEnabled(True)

        def plot_loss_acc(self, widget, strPlot):
            # load array
            history = \
                np.load('history_plate_cnn.npy', allow_pickle=True).item()
            train_loss = history['loss']
            val_loss = history['val_loss']
            train_acc = history['accuracy']
            val_acc = history['val_accuracy']

            if strPlot == 'Loss':
                widget.canvas.axis1.clear()
                widget.canvas.axis1.plot(train_loss,
                    label='Training Loss', linewidth=3.0)
                widget.canvas.axis1.plot(val_loss,
                    label='Validation Loss', linewidth=3.0)
                widget.canvas.axis1.set_title('Loss')
                widget.canvas.axis1.set_xlabel('Epoch')
                widget.canvas.axis1.grid()
                widget.canvas.axis1.legend()
                widget.canvas.draw()
            else:
                widget.canvas.axis1.clear()
                widget.canvas.axis1.plot(train_acc,
                    label='Training Accuracy', linewidth=3.0)
                widget.canvas.axis1.plot(val_acc,
                    label='Validation Accuracy', linewidth=3.0)
                widget.canvas.axis1.set_title('Accuracy')
                widget.canvas.axis1.set_xlabel('Epoch')
                widget.canvas.axis1.grid()
                widget.canvas.axis1.legend()
                widget.canvas.draw()

        def show_prediction(self, widget1, widget2, dataX, dataY,
                            imagePerRow, n=10):
            row = int(n/imagePerRow)
            # Fixed seed, so the same starting offset is used on every call
            seed(1)
            rand = randint(0, 20)
            for i in range(n):
                widget1.canvas.axis1 = \
                    widget1.canvas.figure.add_subplot(row, imagePerRow, i+1)
                ny = dataY[rand+i]*255
                image = cv2.rectangle(dataX[rand+i],
                    (int(ny[0]), int(ny[1])), (int(ny[2]), int(ny[3])),
                    (0, 255, 0), 3)
                cv2.putText(image, 'plate', (int(ny[2]), int(ny[3])-10),
                    cv2.FONT_HERSHEY_SIMPLEX, 0.9, (0, 255, 0), 2)
                widget1.canvas.axis1.clear()
                widget1.canvas.axis1.set_xticks([])
                widget1.canvas.axis1.set_yticks([])
                image = np.clip(image, 0, 1)
                widget1.canvas.axis1.imshow(image)
                widget1.canvas.axis1.axis('off')

                #Displays cropped license plate
                widget2.canvas.axis1 = \
                    widget2.canvas.figure.add_subplot(row, imagePerRow, i+1)
                img = dataX[rand+i]
                cropped_image = img[int(ny[3]):int(ny[1]),
                                    int(ny[2]):int(ny[0])]
                widget2.canvas.axis1.clear()
                widget2.canvas.axis1.set_xticks([])
                widget2.canvas.axis1.set_yticks([])
                cropped_image = np.clip(cropped_image, 0, 1)
                widget2.canvas.axis1.imshow(cropped_image)
                widget2.canvas.axis1.axis('off')

            widget1.canvas.draw()
            widget2.canvas.draw()

        def open_image(self):
            fname = QFileDialog.getOpenFileName(self, 'Open file',
                'd:\\', "Image Files (*.jpg *.gif *.bmp *.png)")
            #pixmap = QPixmap(fname[0])
            # fname[0] is an empty string if the dialog was cancelled
            if not fname[0]:
                return None
            img = cv2.imread(fname[0], cv2.IMREAD_COLOR)
            return img

        def detect_plate(self):
            image = self.open_image()
            # Nothing to do if no readable image was chosen
            if image is None:
                return
            self.widgetPlot.canvas.figure.clf()
            self.check_plate(image, self.widgetPlot)

        def check_plate(self, image, widget):
            #Loads model
            model = load_model('plate_cnn.h5')

            image = cv2.resize(image, (self.IMAGE_SIZE, self.IMAGE_SIZE),
                interpolation=cv2.INTER_AREA)
            img_scaled = image/255.0
            reshape = np.reshape(img_scaled,
                (1, self.IMAGE_SIZE, self.IMAGE_SIZE, 3))
            xtest = reshape
            y_cnn = model.predict(xtest)

            pred = y_cnn*255
            ny = np.array(pred[0])
            image = cv2.rectangle(image,
                (int(ny[0]), int(ny[1])), (int(ny[2]), int(ny[3])),
                (0, 255, 0), 2)
            cv2.putText(image, 'plate', (int(ny[2]), int(ny[3])-10),
                cv2.FONT_HERSHEY_SIMPLEX, 0.9, (0, 255, 0), 2)

            widget.canvas.axis1 = widget.canvas.figure.add_subplot(121)
            widget.canvas.axis1.clear()
            widget.canvas.axis1.set_xticks([])
            widget.canvas.axis1.set_yticks([])
            widget.canvas.axis1.imshow(cv2.cvtColor(image,
                cv2.COLOR_BGR2RGB))
            widget.canvas.axis1.axis('off')
            widget.canvas.draw()

            widget.canvas.axis1 = widget.canvas.figure.add_subplot(122)
            cropped_image = image[int(ny[3]):int(ny[1]),
                                  int(ny[2]):int(ny[0])]
            widget.canvas.axis1.clear()
            widget.canvas.axis1.set_xticks([])
            widget.canvas.axis1.set_yticks([])
            widget.canvas.axis1.imshow(cv2.cvtColor(cropped_image,
                cv2.COLOR_BGR2RGB))
            widget.canvas.axis1.axis('off')
            widget.canvas.draw()

    if __name__ == '__main__':
        import sys
        app = QApplication(sys.argv)
        ex = DemoGUI_DetectPlate()
        ex.show()
        sys.exit(app.exec_())