This content is powered by Balige Publishing (collaboration with Rismon Hasiholan Sianipar).

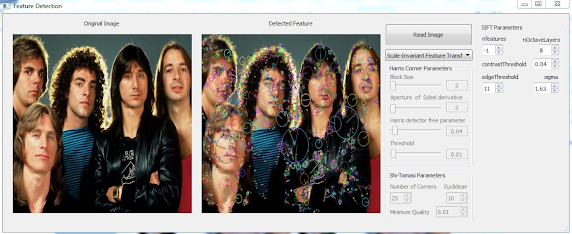

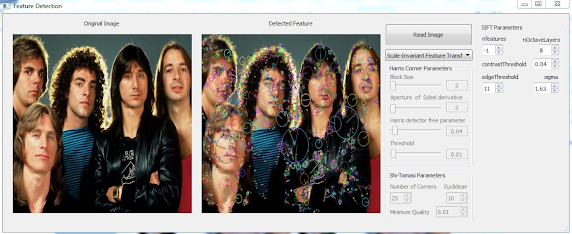

In this tutorial, you will learn how to use OpenCV, NumPy, and other libraries to perform feature extraction with a Python GUI (PyQt). The feature detection techniques used in this chapter are Harris Corner Detection, Shi-Tomasi Corner Detector, Scale-Invariant Feature Transform (SIFT), Speeded-Up Robust Features (SURF), Features from Accelerated Segment Test (FAST), Binary Robust Independent Elementary Features (BRIEF), and Oriented FAST and Rotated BRIEF (ORB).

Tutorial Steps To Detect Features Using Scale-Invariant Feature Transform (SIFT)

In OpenCV, the sift.detect() function finds the keypoints in an image. You can pass a mask if you want to search only part of the image. Each keypoint is a special structure with several attributes, such as its (x, y) coordinates, the size of the meaningful neighbourhood, the angle that specifies its orientation, and the response that specifies the strength of the keypoint.

Now let's look at the SIFT functionality available in OpenCV, starting with detecting keypoints and drawing them. First, construct a SIFT object. You can pass several optional parameters to it, as follows:

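```python
# sift.py
import numpy as np
import cv2 as cv

# Load the image and convert it to grayscale
img = cv.imread('chessboard.png')
gray = cv.cvtColor(img, cv.COLOR_BGR2GRAY)

# Construct the SIFT object; all parameters are optional
sift = cv.SIFT_create(nfeatures=0, nOctaveLayers=3,
                      contrastThreshold=0.04, edgeThreshold=10, sigma=1.6)

# Detect keypoints over the whole image (no mask)
kp = sift.detect(gray, None)

# Draw the keypoints and show the result
img = cv.drawKeypoints(gray, kp, img)
cv.imshow('SIFT', img)
if cv.waitKey(0) & 0xff == 27:
    cv.destroyAllWindows()
```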

Run sift.py and see the result, as shown in the figure below.

OpenCV also provides the cv.drawKeypoints() function, which draws small circles at the locations of the keypoints.

If you pass the flag cv.DRAW_MATCHES_FLAGS_DRAW_RICH_KEYPOINTS to it, it draws each circle with the size of the keypoint and also shows its orientation. See the following example:

```python
# sift2.py
import numpy as np
import cv2 as cv

# Load the image and convert it to grayscale
img = cv.imread('chessboard.png')
gray = cv.cvtColor(img, cv.COLOR_BGR2GRAY)

sift = cv.SIFT_create(nfeatures=0, nOctaveLayers=3,
                      contrastThreshold=0.04, edgeThreshold=10, sigma=1.6)
kp = sift.detect(gray, None)

# Draw each keypoint with its size and orientation
img = cv.drawKeypoints(gray, kp, img,
                       flags=cv.DRAW_MATCHES_FLAGS_DRAW_RICH_KEYPOINTS)
cv.imshow('SIFT', img)
if cv.waitKey(0) & 0xff == 27:
    cv.destroyAllWindows()
```

Run sift2.py and see the result, as shown in the figure below.

Now, you will modify feature_detection.ui to implement Scale-Invariant Feature Transform (SIFT). Add a Group Box widget and set its objectName property to gbSIFT.

Inside the group box, put three Spin Box widgets. Set their objectName properties to sbFeature, sbOctave, and sbEdge. Set the value property of sbFeature to 0, that of sbOctave to 3, and that of sbEdge to 10. Set the minimum property of sbFeature to -10, that of sbOctave to 1, and that of sbEdge to 0. Set the maximum property of sbFeature to 5, that of sbOctave to 100, and that of sbEdge to 100.

Then, add two Double Spin Box widgets. Set their objectName properties to dsbContrast and dsbSigma. Set the value property of dsbContrast to 0.04 and that of dsbSigma to 1.60. Set the maximum property of dsbContrast to 1.00 and that of dsbSigma to 10.00. Set the singleStep property of both Double Spin Box widgets to 0.01.
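For reference, the widget settings listed above can be collected in one place. This is only a sketch: SIFT_WIDGET_RANGES and clamp() are hypothetical names that do not appear in feature_detection.py, and the minimum of the two Double Spin Box widgets is not stated in the text, so Qt's default of 0.0 is assumed here. The clamp() helper mimics how a spin box keeps a value inside its range.

```python
# Widget defaults and ranges as listed in the text, keyed by objectName.
# SIFT_WIDGET_RANGES and clamp() are hypothetical names for this sketch.
SIFT_WIDGET_RANGES = {
    "sbFeature":   {"default": 0,    "min": -10, "max": 5},
    "sbOctave":    {"default": 3,    "min": 1,   "max": 100},
    "sbEdge":      {"default": 10,   "min": 0,   "max": 100},
    # Minimum of the double spin boxes is an assumption (Qt default 0.0).
    "dsbContrast": {"default": 0.04, "min": 0.0, "max": 1.0},
    "dsbSigma":    {"default": 1.6,  "min": 0.0, "max": 10.0},
}


def clamp(name, value):
    """Keep value inside the range of the named widget, as a spin box would."""
    r = SIFT_WIDGET_RANGES[name]
    return max(r["min"], min(r["max"], value))


print(clamp("sbFeature", 20))  # limited to the maximum of sbFeature
print(clamp("sbOctave", 0))    # raised to the minimum of sbOctave
```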

Modify initialization() to involve gbSIFT widget as follows:

```python
def initialization(self, state):
    self.cboFeature.setEnabled(state)
    self.gbHarris.setEnabled(state)
    self.gbShiTomasi.setEnabled(state)
    self.gbSIFT.setEnabled(state)
```

Define a new method, sift_detection(), to implement SIFT as follows:

```python
def sift_detection(self):
    self.test_im = self.img.copy()

    # Read the SIFT parameters from the widgets
    nfeatures = self.sbFeature.value()
    nOctaveLayers = self.sbOctave.value()
    contrastThreshold = self.dsbContrast.value()
    edgeThreshold = self.sbEdge.value()
    sigma = self.dsbSigma.value()

    # Detect keypoints on the grayscale image
    gray = cv2.cvtColor(self.test_im, cv2.COLOR_BGR2GRAY)
    sift = cv2.SIFT_create(nfeatures, nOctaveLayers,
                           contrastThreshold, edgeThreshold, sigma)
    kp = sift.detect(gray, None)

    # Draw rich keypoints, convert BGR to RGB, and display the result
    self.test_im = cv2.drawKeypoints(
        self.test_im, kp, self.test_im,
        flags=cv2.DRAW_MATCHES_FLAGS_DRAW_RICH_KEYPOINTS)
    cv2.cvtColor(self.test_im, cv2.COLOR_BGR2RGB, self.test_im)
    self.display_image(self.test_im, self.labelResult)
```

Modify choose_feature() so that when the user chooses Scale-Invariant Feature Transform (SIFT) from the combo box, it invokes sift_detection() as follows:

```python
def choose_feature(self):
    strCB = self.cboFeature.currentText()

    if strCB == 'Harris Corner Detection':
        self.gbHarris.setEnabled(True)
        self.gbShiTomasi.setEnabled(False)
        self.gbSIFT.setEnabled(False)
        self.harris_detection()

    if strCB == 'Shi-Tomasi Corner Detector':
        self.gbHarris.setEnabled(False)
        self.gbShiTomasi.setEnabled(True)
        self.gbSIFT.setEnabled(False)
        self.shi_tomasi_detection()

    if strCB == 'Scale-Invariant Feature Transform (SIFT)':
        self.gbHarris.setEnabled(False)
        self.gbShiTomasi.setEnabled(False)
        self.gbSIFT.setEnabled(True)
        self.sift_detection()
```
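Each new detector adds another branch and another setEnabled() line to every existing branch. One possible alternative, shown here only as a sketch, is a lookup table that maps the combo box text to a group box and a method. FakeForm and FEATURES are hypothetical names; the class records what would happen instead of touching real widgets, so it runs without PyQt.

```python
class FakeForm:
    """Hypothetical stand-in for FormFeatureDetection (no Qt needed)."""

    # Combo box text -> (group box to enable, detection method to call)
    FEATURES = {
        'Harris Corner Detection': ('gbHarris', 'harris_detection'),
        'Shi-Tomasi Corner Detector': ('gbShiTomasi', 'shi_tomasi_detection'),
        'Scale-Invariant Feature Transform (SIFT)': ('gbSIFT', 'sift_detection'),
    }

    def __init__(self):
        self.enabled = {}   # group box name -> enabled state
        self.calls = []     # detection methods that would have been called

    def choose_feature(self, text):
        if text not in self.FEATURES:
            return
        active_box, method = self.FEATURES[text]
        for box, _ in self.FEATURES.values():
            # In the real form: getattr(self, box).setEnabled(box == active_box)
            self.enabled[box] = (box == active_box)
        # In the real form: getattr(self, method)()
        self.calls.append(method)


form = FakeForm()
form.choose_feature('Scale-Invariant Feature Transform (SIFT)')
print(form.enabled)
print(form.calls)
```

With this layout, adding a detector means adding one entry to the table rather than editing every branch.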

Connect the valueChanged() signals of sbFeature, sbOctave, dsbContrast, sbEdge, and dsbSigma to the sift_detection() method inside the __init__() method as follows:

```python
def __init__(self):
    QMainWindow.__init__(self)
    loadUi("feature_detection.ui", self)
    self.setWindowTitle("Feature Detection")
    self.pbReadImage.clicked.connect(self.read_image)
    self.initialization(False)
    self.cboFeature.currentIndexChanged.connect(self.choose_feature)
    self.hsBlockSize.valueChanged.connect(self.set_hsBlockSize)
    self.hsKSize.valueChanged.connect(self.set_hsKSize)
    self.hsK.valueChanged.connect(self.set_hsK)
    self.hsThreshold.valueChanged.connect(self.set_hsThreshold)
    self.sbCorner.valueChanged.connect(self.shi_tomasi_detection)
    self.sbEuclidean.valueChanged.connect(self.shi_tomasi_detection)
    self.dsbQuality.valueChanged.connect(self.shi_tomasi_detection)
    self.sbFeature.valueChanged.connect(self.sift_detection)
    self.sbOctave.valueChanged.connect(self.sift_detection)
    self.dsbContrast.valueChanged.connect(self.sift_detection)
    self.sbEdge.valueChanged.connect(self.sift_detection)
    self.dsbSigma.valueChanged.connect(self.sift_detection)
```

Run feature_detection.py, open an image, and choose Scale-Invariant Feature Transform (SIFT) from the combo box. Change the parameters to see their effect; the results are shown in the two figures.

Below is the full script of feature_detection.py so far:

```python
# feature_detection.py
import sys
import cv2
import numpy as np
from PyQt5.QtWidgets import *
from PyQt5 import QtGui, QtCore
from PyQt5.uic import loadUi
from matplotlib.backends.backend_qt5agg import (NavigationToolbar2QT as NavigationToolbar)
from PyQt5.QtWidgets import QDialog, QFileDialog
from PyQt5.QtGui import QIcon, QPixmap, QImage


class FormFeatureDetection(QMainWindow):
    def __init__(self):
        QMainWindow.__init__(self)
        loadUi("feature_detection.ui", self)
        self.setWindowTitle("Feature Detection")
        self.pbReadImage.clicked.connect(self.read_image)
        self.initialization(False)
        self.cboFeature.currentIndexChanged.connect(self.choose_feature)
        self.hsBlockSize.valueChanged.connect(self.set_hsBlockSize)
        self.hsKSize.valueChanged.connect(self.set_hsKSize)
        self.hsK.valueChanged.connect(self.set_hsK)
        self.hsThreshold.valueChanged.connect(self.set_hsThreshold)
        self.sbCorner.valueChanged.connect(self.shi_tomasi_detection)
        self.sbEuclidean.valueChanged.connect(self.shi_tomasi_detection)
        self.dsbQuality.valueChanged.connect(self.shi_tomasi_detection)
        self.sbFeature.valueChanged.connect(self.sift_detection)
        self.sbOctave.valueChanged.connect(self.sift_detection)
        self.dsbContrast.valueChanged.connect(self.sift_detection)
        self.sbEdge.valueChanged.connect(self.sift_detection)
        self.dsbSigma.valueChanged.connect(self.sift_detection)

    def read_image(self):
        self.fname = QFileDialog.getOpenFileName(
            self, 'Open file', 'd:\\',
            "Image Files (*.jpg *.gif *.bmp *.png)")
        self.pixmap = QPixmap(self.fname[0])
        self.labelImage.setPixmap(self.pixmap)
        self.labelImage.setScaledContents(True)
        self.img = cv2.imread(self.fname[0], cv2.IMREAD_COLOR)
        self.cboFeature.setEnabled(True)

    def initialization(self, state):
        self.cboFeature.setEnabled(state)
        self.gbHarris.setEnabled(state)
        self.gbShiTomasi.setEnabled(state)
        self.gbSIFT.setEnabled(state)

    def set_hsBlockSize(self, value):
        self.leBlockSize.setText(str(value))
        self.harris_detection()

    def set_hsKSize(self, value):
        self.leKSize.setText(str(value))
        self.harris_detection()

    def set_hsK(self, value):
        self.leK.setText(str(round((value/100), 2)))
        self.harris_detection()

    def set_hsThreshold(self, value):
        self.leThreshold.setText(str(round((value/100), 2)))
        self.harris_detection()

    def choose_feature(self):
        strCB = self.cboFeature.currentText()

        if strCB == 'Harris Corner Detection':
            self.gbHarris.setEnabled(True)
            self.gbShiTomasi.setEnabled(False)
            self.gbSIFT.setEnabled(False)
            self.harris_detection()

        if strCB == 'Shi-Tomasi Corner Detector':
            self.gbHarris.setEnabled(False)
            self.gbShiTomasi.setEnabled(True)
            self.gbSIFT.setEnabled(False)
            self.shi_tomasi_detection()

        if strCB == 'Scale-Invariant Feature Transform (SIFT)':
            self.gbHarris.setEnabled(False)
            self.gbShiTomasi.setEnabled(False)
            self.gbSIFT.setEnabled(True)
            self.sift_detection()

    def harris_detection(self):
        self.test_im = self.img.copy()
        gray = cv2.cvtColor(self.test_im, cv2.COLOR_BGR2GRAY)
        gray = np.float32(gray)
        blockSize = int(self.leBlockSize.text())
        kSize = int(self.leKSize.text())
        K = float(self.leK.text())
        dst = cv2.cornerHarris(gray, blockSize, kSize, K)

        # dilated for marking the corners
        dst = cv2.dilate(dst, None)

        # Threshold for an optimal value, it may vary depending on the image.
        Thresh = float(self.leThreshold.text())
        self.test_im[dst > Thresh*dst.max()] = [0, 0, 255]
        cv2.cvtColor(self.test_im, cv2.COLOR_BGR2RGB, self.test_im)
        self.display_image(self.test_im, self.labelResult)

    def display_image(self, img, label):
        height, width, channel = img.shape
        bytesPerLine = 3 * width
        qImg = QImage(img, width, height,
                      bytesPerLine, QImage.Format_RGB888)
        pixmap = QPixmap.fromImage(qImg)
        label.setPixmap(pixmap)
        label.setScaledContents(True)

    def shi_tomasi_detection(self):
        self.test_im = self.img.copy()
        number_corners = self.sbCorner.value()
        euclidean_dist = self.sbEuclidean.value()
        min_quality = self.dsbQuality.value()
        gray = cv2.cvtColor(self.test_im, cv2.COLOR_BGR2GRAY)
        corners = cv2.goodFeaturesToTrack(gray, number_corners,
                                          min_quality, euclidean_dist)
        # np.int0 was removed in NumPy 2.0; np.intp is the same integer type
        corners = np.intp(corners)

        for i in corners:
            x, y = i.ravel()
            cv2.circle(self.test_im, (x, y), 5, [0, 255, 0], -1)

        cv2.cvtColor(self.test_im, cv2.COLOR_BGR2RGB, self.test_im)
        self.display_image(self.test_im, self.labelResult)

    def sift_detection(self):
        self.test_im = self.img.copy()
        nfeatures = self.sbFeature.value()
        nOctaveLayers = self.sbOctave.value()
        contrastThreshold = self.dsbContrast.value()
        edgeThreshold = self.sbEdge.value()
        sigma = self.dsbSigma.value()
        gray = cv2.cvtColor(self.test_im, cv2.COLOR_BGR2GRAY)
        sift = cv2.SIFT_create(nfeatures, nOctaveLayers,
                               contrastThreshold, edgeThreshold, sigma)
        kp = sift.detect(gray, None)
        self.test_im = cv2.drawKeypoints(
            self.test_im, kp, self.test_im,
            flags=cv2.DRAW_MATCHES_FLAGS_DRAW_RICH_KEYPOINTS)
        cv2.cvtColor(self.test_im, cv2.COLOR_BGR2RGB, self.test_im)
        self.display_image(self.test_im, self.labelResult)


if __name__ == "__main__":
    app = QApplication(sys.argv)
    w = FormFeatureDetection()
    w.show()
    sys.exit(app.exec_())
```
